Storage multipathing

Dynamic multipathing support is available for Fibre Channel and iSCSI storage back-ends.

XenServer uses Linux native multipathing (DM-MP), the generic Linux multipathing solution, as its multipath handler. However, XenServer supplements this handler with additional features so that XenServer can recognize vendor-specific features of storage devices.

Configuring multipathing provides redundancy for remote storage traffic if there is partial connectivity loss. Multipathing routes storage traffic to a storage device over multiple paths for redundancy and increased throughput. You can use up to 16 paths to a single LUN. Multipathing is an active-active configuration. By default, multipathing uses either round-robin or multibus load balancing depending on the storage array type. All routes have active traffic on them during normal operation, which results in increased throughput.

Important:

We recommend that you enable multipathing for all hosts in your pool before creating the SR. If you create the SR before enabling multipathing, you must put your hosts into maintenance mode to enable multipathing.

NIC bonding can also provide redundancy for storage traffic. For iSCSI storage, we recommend configuring multipathing instead of NIC bonding whenever possible.

Multipathing is not effective in the following scenarios:

- NFS storage devices

- You have a limited number of NICs and need to route iSCSI traffic and file traffic (NFS or SMB) over the same NIC

In these cases, consider using NIC bonding instead. For more information about NIC bonding, see Networking.

Prerequisites

Before enabling multipathing, verify that the following statements are true:

-

Multiple targets are available on your storage server.

For example, an iSCSI storage back-end queried for sendtargets on a given portal returns multiple targets, as in the following example:

iscsiadm -m discovery --type sendtargets --portal 192.168.0.161
192.168.0.161:3260,1 iqn.strawberry:litchie
192.168.0.204:3260,2 iqn.strawberry:litchie

However, you can perform additional configuration to enable iSCSI multipath for arrays that only expose a single target. For more information, see iSCSI multipath for arrays that only expose a single target.

-

For iSCSI only, the control domain (dom0) has an IP address on each subnet used by the multipathed storage.

Ensure that for each path you want to have to the storage, you have a NIC and that there is an IP address configured on each NIC. For example, if you want four paths to your storage, you must have four NICs that each have an IP address configured.

-

For iSCSI only, every iSCSI target and initiator has a unique IQN.

-

For iSCSI only, the iSCSI target ports are operating in portal mode.

-

For HBA only, multiple HBAs are connected to the switch fabric.

-

When you are configuring secondary interfaces, each secondary interface must be on a separate subnet. For example, if you want to configure two more secondary interfaces for storage, you require IP addresses on three different subnets – one subnet for the management interface, one subnet for Secondary Interface 1, and one subnet for Secondary Interface 2.

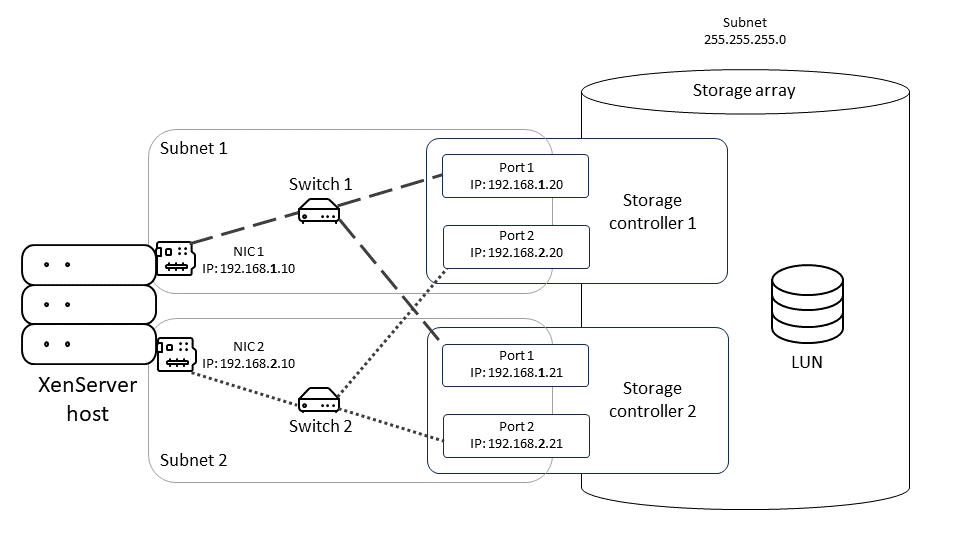

This diagram shows how both NICs on the host in a multipathed iSCSI configuration must be on different subnets. In this diagram, NIC 1 on the host, along with Switch 1 and NIC 1 on both storage controllers, is on a different subnet than NIC 2, Switch 2, and NIC 2 on the storage controllers.
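As a rough way to check the first prerequisite, the discovery output can be summarized per target. The following sketch is illustrative only: the helper name count_portals is hypothetical, and it assumes the output format shown in the sendtargets example above, where each line holds a portal with its portal group tag followed by the IQN.

```shell
# Count the distinct portals advertised for one target IQN.
# Reads `iscsiadm -m discovery` output on stdin, one "ip:port,tpgt iqn" record per line.
count_portals() {
    target_iqn="$1"
    awk -v iqn="$target_iqn" '
        $2 == iqn { split($1, parts, ","); seen[parts[1]] = 1 }
        END { n = 0; for (p in seen) n++; print n }
    '
}
```

For example, piping the output of iscsiadm -m discovery --type sendtargets --portal 192.168.0.161 into count_portals iqn.strawberry:litchie prints the number of portals that advertise that target. A result of 1 suggests that only a single path is available for it.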

Enable multipathing

You can enable multipathing in XenCenter or on the xe CLI.

To enable multipathing by using XenCenter

-

In the XenCenter Resources pane, right-click on the host and choose Enter Maintenance Mode.

-

Wait until the host reappears in the Resources pane with the maintenance mode icon (a blue square) before continuing.

-

On the General tab for the host, click Properties and then go to the Multipathing tab.

-

To enable multipathing, select the Enable multipathing on this server check box.

-

Click OK to apply the new setting. There is a short delay while XenCenter saves the new storage configuration.

-

In the Resources pane, right-click on the host and choose Exit Maintenance Mode.

-

Repeat these steps to enable multipathing on all hosts in the pool.

Ensure that you enable multipathing on all hosts in the pool. All cabling and, in the case of iSCSI, subnet configurations must match the corresponding NICs on each host.

To enable multipathing by using the xe CLI

-

Open a console on the XenServer host.

-

Unplug all PBDs on the host by using the following command:

xe pbd-unplug uuid=<pbd_uuid>

You can use the command xe pbd-list to find the UUID of the PBDs.

-

Set the value of the multipathing parameter to true by using the following command:

xe host-param-set uuid=<host uuid> multipathing=true

-

If there are existing SRs on the server running in single path mode but that have multiple paths:

-

Migrate or suspend any running guests with virtual disks in the affected SRs

-

Replug the PBD of any affected SRs to reconnect them using multipathing:

xe pbd-plug uuid=<pbd_uuid>

-

Repeat these steps to enable multipathing on all hosts in the pool.

Ensure that you enable multipathing on all hosts in the pool. All cabling and, in the case of iSCSI, subnet configurations must match the corresponding NICs on each host.
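The CLI steps above can be sketched as a single shell function. This is a minimal sketch, not a supported tool: the function name set_host_multipathing is hypothetical, it assumes that no running guests use the affected SRs, and it assumes that xe pbd-list accepts the host-uuid and currently-attached filters together with params=uuid and --minimal, as on a standard XenServer host.

```shell
# Sketch: change the multipathing setting on one host.
# Usage: set_host_multipathing <host_uuid> true|false
set_host_multipathing() {
    host_uuid="$1"
    enabled="$2"

    # Collect the UUIDs of the PBDs that are currently plugged on this host.
    # --minimal prints a comma-separated list, so convert it to spaces.
    pbds=$(xe pbd-list host-uuid="$host_uuid" currently-attached=true \
        params=uuid --minimal | tr ',' ' ')

    # Unplug every PBD before changing the setting.
    for pbd in $pbds; do
        xe pbd-unplug uuid="$pbd" || return 1
    done

    xe host-param-set uuid="$host_uuid" multipathing="$enabled" || return 1

    # Replug the same PBDs so the SRs reconnect with the new setting.
    for pbd in $pbds; do
        xe pbd-plug uuid="$pbd" || return 1
    done
}
```

The function stops at the first failing command, so a PBD that cannot be unplugged (for example, because a guest still uses it) leaves the multipathing setting unchanged.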

Disable multipathing

You can disable multipathing in XenCenter or on the xe CLI.

To disable multipathing by using XenCenter

-

In the XenCenter Resources pane, right-click on the host and choose Enter Maintenance Mode.

-

Wait until the host reappears in the Resources pane with the maintenance mode icon (a blue square) before continuing.

-

On the General tab for the host, click Properties and then go to the Multipathing tab.

-

To disable multipathing, clear the Enable multipathing on this server check box.

-

Click OK to apply the new setting. There is a short delay while XenCenter saves the new storage configuration.

-

In the Resources pane, right-click on the host and choose Exit Maintenance Mode.

-

Repeat these steps to disable multipathing on all hosts in the pool.

To disable multipathing by using the xe CLI

-

Open a console on the XenServer host.

-

Unplug all PBDs on the host by using the following command:

xe pbd-unplug uuid=<pbd_uuid>

You can use the command xe pbd-list to find the UUID of the PBDs.

-

Set the value of the multipathing parameter to false by using the following command:

xe host-param-set uuid=<host uuid> multipathing=false

-

If there are existing SRs on the server running in single path mode but that have multiple paths:

-

Migrate or suspend any running guests with virtual disks in the affected SRs

-

Replug the PBD of any affected SRs to reconnect them without multipathing:

xe pbd-plug uuid=<pbd_uuid>

-

Repeat these steps to disable multipathing on all hosts in the pool.

Configure multipathing

To do additional multipath configuration, create files with the suffix .conf in the directory /etc/multipath/conf.d. Add the additional configuration in these files. Multipath searches the directory alphabetically for files ending in .conf and reads configuration information from them.

Do not edit the file /etc/multipath.conf. This file is overwritten by updates to XenServer.
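As an illustration of a drop-in file, the following sketch writes a device section into a .conf file. Everything specific in it is an assumption: the function name write_multipath_dropin, the file name custom.conf, and the vendor and product strings are placeholders, and the path_grouping_policy value is only an example. Take the real values from your storage vendor's recommendations.

```shell
# Sketch: write a drop-in multipath configuration file.
# The vendor and product strings are placeholders, not a tested array profile.
# Usage: write_multipath_dropin [directory]   (defaults to /etc/multipath/conf.d)
write_multipath_dropin() {
    conf_dir="${1:-/etc/multipath/conf.d}"
    cat > "$conf_dir/custom.conf" <<'EOF'
devices {
    device {
        vendor  "EXAMPLE_VENDOR"
        product "EXAMPLE_PRODUCT"
        path_grouping_policy multibus
    }
}
EOF
}
```

Passing a scratch directory as the argument lets you inspect the generated file before placing it in /etc/multipath/conf.d.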

Multipath tools

Multipath support in XenServer is based on the device-mapper multipathd components. The Storage Manager API handles activating and deactivating multipath nodes automatically. Unlike the standard dm-multipath tools in Linux, device mapper nodes are not automatically created for all LUNs on the system. Device mapper nodes are only provisioned when LUNs are actively used by the storage management layer. Therefore, it is unnecessary to use any of the dm-multipath CLI tools to query or refresh DM table nodes in XenServer.

If it is necessary to query the status of device-mapper tables manually, or list active device mapper multipath nodes on the system, use the mpathutil utility:

mpathutil list

mpathutil status

iSCSI multipath for arrays that only expose a single target

You can configure XenServer to use iSCSI multipath with storage arrays that only expose a single iSCSI target and one IQN, through one IP address. For example, you can follow these steps to set up Dell EqualLogic PS and FS unified series storage arrays.

By default, XenServer establishes only one connection per iSCSI target. With the default configuration, we therefore recommend NIC bonding to achieve failover and load balancing. The procedure in this section describes an alternative configuration, where multiple iSCSI connections are established for a single iSCSI target. NIC bonding is not required.

Note:

The following configuration is only supported for servers that are exclusively attached to storage arrays which expose only a single iSCSI target. These storage arrays must be qualified for this procedure with XenServer.

To configure multipath:

-

Back up any data you want to protect.

-

In the XenCenter Resources pane, right-click on the host and choose Enter Maintenance Mode.

-

Wait until the host reappears in the Resources pane with the maintenance mode icon (a blue square) before continuing.

-

On the General tab for the host, click Properties and then go to the Multipathing tab.

-

To enable multipathing, select the Enable multipathing on this server check box.

-

Click OK to apply the new setting. There is a short delay while XenCenter saves the new storage configuration.

-

In the host console, configure two to four Open-iSCSI interfaces. Each iSCSI interface is used to establish a separate path. The following steps show the process for two interfaces:

-

To configure two iSCSI interfaces, run the following commands:

iscsiadm -m iface --op new -I c_iface1
iscsiadm -m iface --op new -I c_iface2

Ensure that the interface names have the prefix c_. If the interfaces do not use this naming standard, they are ignored and the default interface is used instead.

Note:

When the default interface is used, all connections are established through that single interface.

-

Bind the iSCSI interfaces to xenbr1 and xenbr2 by using the following commands:

iscsiadm -m iface --op update -I c_iface1 -n iface.net_ifacename -v xenbr1
iscsiadm -m iface --op update -I c_iface2 -n iface.net_ifacename -v xenbr2

Note:

This configuration assumes that the network interfaces configured for the control domain include xenbr1 and xenbr2, and that xenbr0 is used for management. It also assumes that the NICs used for the storage network are NIC1 and NIC2. If this is not the case, refer to your network topology to identify the network interfaces and NICs to use in these commands.

-

In the XenCenter Resources pane, right-click on the host and choose Exit Maintenance Mode. Do not resume your VMs yet.

-

In the host console, run the following commands to discover and log in to the sessions:

iscsiadm -m discovery -t st -p <IP of SAN>
iscsiadm -m node -L all

-

Delete the stale entries containing old session information by using the following commands:

cd /var/lib/iscsi/send_targets/<IP of SAN and port, use ls command to check that>
rm -rf <iqn of SAN target for that particular LUN>
cd /var/lib/iscsi/nodes/
rm -rf <entries for that particular SAN>

-

Detach the LUN and attach it again. You can do this in one of the following ways:

- After completing the preceding steps on all hosts in a pool, you can use XenCenter to detach and reattach the LUN for the entire pool.

-

Alternatively, you can unplug and destroy the PBD for each host and then repair the SR.

-

Run the following commands to unplug and destroy the PBD:

-

Find the UUID of the SR:

xe sr-list

-

Get the list of PBDs associated with the SR:

xe pbd-list sr-uuid=<sr_uuid>

-

In the output of the previous command, look for the UUID of the PBD of the iSCSI Storage Repository with a mismatched SCSI ID.

-

Unplug and destroy the PBD you identified:

xe pbd-unplug uuid=<pbd_uuid>
xe pbd-destroy uuid=<pbd_uuid>

-

Repair the storage in XenCenter.

-

You can now resume your VMs.
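The interface creation and binding steps in the procedure above can be sketched as one loop. This is a hedged sketch rather than a supported script: the function name configure_iscsi_ifaces is hypothetical, and the bridge names that you pass in are assumptions that depend on your network topology. The iscsiadm invocations are the same ones shown in the procedure.

```shell
# Sketch: create one c_-prefixed Open-iSCSI interface per bridge and bind it.
# Usage: configure_iscsi_ifaces xenbr1 xenbr2
configure_iscsi_ifaces() {
    i=1
    for bridge in "$@"; do
        # The c_ prefix is required; other names are ignored.
        iface="c_iface$i"
        iscsiadm -m iface --op new -I "$iface" || return 1
        iscsiadm -m iface --op update -I "$iface" \
            -n iface.net_ifacename -v "$bridge" || return 1
        i=$((i + 1))
    done
}
```

Calling configure_iscsi_ifaces xenbr1 xenbr2 reproduces the two-interface example. Passing three or four bridges creates correspondingly more paths, within the two to four interfaces that the procedure describes.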