Citrix Cloud Native Networking for Red Hat OpenShift 3.11 Validated Reference Design

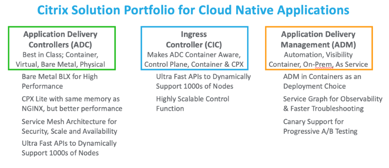

The Citrix ADC stack meets the basic requirements for application availability (ADC), security feature segregation (WAF), scaling of agile application topologies (SSL and GSLB), and proactive observability (Service Graph) in a highly orchestrated, cloud native environment.

Digital Transformation is fueling the need to move modern application deployments to microservice-based architectures. These Cloud Native architectures leverage application containers, microservices and Kubernetes.

The Cloud Native approach to modern applications has also transformed the development lifecycle, including agile workflows, automated deployment toolsets, and development languages and platforms.

The new era of modern application deployment has also shifted traditional data center business model disciplines, including monthly and yearly software releases and contracts, siloed compute resources and budgets, and the vendor consumption model.

While all this modernization is occurring in the ecosystem, there are still basic requirements for application availability (ADC), security feature segregation (WAF), scaling of agile application topologies (SSL and GSLB), and proactive observability (Service Graph) within a highly orchestrated environment.

Why Citrix for Modern Application Delivery

The Citrix software approach to modern application deployment requires the incorporation of an agile workflow across many teams within the organization. One of the advantages of agile application development and delivery is the framework known as CI/CD.

CI/CD brings speed, safety, and reliability to the modern application lifecycle.

Continuous Integration (CI) maintains a common code base that can be updated several times a day and integrated into an automated build platform. The three phases of Continuous Integration are Push, Test, and Fix.

Continuous Delivery (CD) integrates the deployment pipeline directly into the CI development process, thus optimizing and improving the software delivery model for modern applications.

Citrix ADCs tie into the continuous delivery process by implementing progressive rollouts with automated canary analysis.

A Solution for All Stakeholders

Citrix has created a dedicated software-based solution that addresses the cross-functional requirements of deploying modern applications and integrates the various components of the observability stack, security framework, and CI/CD infrastructure.

Traditional organizations that adopt CI/CD techniques for deploying modern applications have recognized the need to provide a common delivery and availability framework to everyone involved in CI/CD. These participants are generally defined as the business unit "stakeholders." While each stakeholder is invested in the overall success of the organization, each generally has distinct requirements.

Some common examples of stakeholders in the modern delivery activity include:

- Platforms Team – deploy data center infrastructure such as IaaS, PaaS, SDN, ADC, WAF

- DevOps and Engineering Team – develop and maintain the unified code repository, automation tools, software architecture

- Site Reliability Engineering (SRE) Team – reduce organizational silos, error management, deployment automation, and measurements

- Security Operations Team – proactive security policies, incident management, patch deployment, portfolio hardening

The Citrix Software Stack Explained

Single Code Base - it is all the same code for you

- On-premises deployments, public cloud deployments, private cloud deployments, GOV Cloud deployments

Choice of Platforms - to meet any agile requirement, choose any Citrix ADC model

- CPX – the Citrix ADC CPX is a Citrix ADC delivered as a container

- VPX – the Citrix ADC VPX product is a virtual appliance that can be hosted on a wide variety of virtualization and cloud platforms offering performance ranging from 10 Mb/s to 100 Gb/s.

- MPX – the Citrix ADC MPX is a hardware-based application delivery appliance offering performance ranging from 500 Mb/s to 200 Gb/s.

- SDX – the Citrix ADC SDX appliance is a multitenant platform on which you can provision and manage multiple virtual Citrix ADC machines (instances).

- BLX – the Citrix ADC BLX appliance is a software form factor of Citrix ADC. It is designed to run natively on bare-metal Linux on commercial off-the-shelf (COTS) servers.

Containerized Environments - create overlays and automatically configure your Citrix ADC

- Citrix Ingress Controller - built around Kubernetes Ingress and automatically configures one or more Citrix ADC based on the Ingress resource configuration

- Citrix Node Controller – create a VXLAN-based overlay network between the Kubernetes nodes and the Ingress Citrix ADC

- Citrix IPAM Controller – automatically assign an IP address (virtual IP address or VIP) to the load balancing virtual server on a Citrix ADC

Pooled Capacity Licensing – one global license

- A ubiquitous global license pool decouples platforms and licenses for complete flexibility in design and performance

Application Delivery Manager – the single pane of glass

- Manage the fleet, orchestrate policies and applications, monitor and troubleshoot in real-time

Flexible Topologies – traditional data center or modern clouds

- Single tier, two-tier, and Service Mesh Lite

The Citrix ADC Value

- Kubernetes and CNCF Open Source Tools integration

- The Perfect Proxy – a proven Layer 7 application delivery controller for modern apps

- High performance ADC container in either a Pod or Sidecar deployment

- Low Latency access to the Kubernetes cluster using multiple options

- Feature-Rich API – easily implement and orchestrate security features without limit

- Advanced Traffic Steering and Canary deployment for CI/CD

- Proven security through TLS/SSL, WAF, DoS, and API protection

- Rich Layer 7 capabilities

- Integrated Monitoring for Legacy and Modern application deployments

- Actionable Insights and Service Graphs for Visibility

The Citrix ADC Benefits

- Move legacy apps without having to rewrite them

- Developers can secure apps with Citrix ADC policies using Kubernetes APIs (Using CRDs – developer friendly)

- Deploy high performing microservices for North-South and Service Mesh

- Use one Application Service Graph for all microservices

- Troubleshoot microservices problems faster across TCP, UDP, HTTP/S, SSL

- Secure APIs and configure using Kubernetes APIs

- Enhance the CI/CD process for canary deployments

Architecture Components

The Citrix ADC Suite Advantage

Citrix is Choice. Whether you are living with legacy data centers and components, or have launched a new cloud native modern application, Citrix ADC integrates seamlessly into any platform requirement that you may have. We provide cloud native ADC functionality for subscription-based cloud platforms and tools, allow for the directing and orchestration of traffic into your Kubernetes cluster on-premises with easy Ingress controller orchestration, and address the Service Mesh architectures from simple to complex.

Citrix is Validated. Validated design templates and sample applications allow for the easy reference of a desired state and business requirement to be addressed quickly and completely. We have documented and published configuration examples in a central location for ease of reference across DevOps, SecOps, and Platforms teams.

Citrix is Agile and Modern. Create a foundational architecture for customers to consume new features of the Citrix Cloud Native Stack with their existing ADC and new modules (CNC, IPAM, etc.).

Citrix is Open. Help customers understand our integration with partner ecosystems. In this document we use both open source CNCF tools and Citrix enterprise-grade products.

Partner Ecosystem

This topic provides details about various Kubernetes platforms, deployment topologies, features, and CNIs supported in Cloud-Native deployments that include Citrix ADC and Citrix ingress controller.

The Citrix Ingress Controller is supported on the following platforms:

- Kubernetes v1.10 on bare metal or self-hosted on public clouds such as AWS, GCP, or Azure.

- Google Kubernetes Engine (GKE)

- Elastic Kubernetes Service (EKS)

- Azure Kubernetes Service (AKS)

- Red Hat OpenShift version 3.11 and later

- Pivotal Container Service (PKS)

- Diamanti Enterprise Kubernetes Platform

Our Partner Ecosystem also includes the following:

- Prometheus – monitoring tool for metrics, alerting, and insights

- Grafana – a platform for analytics and monitoring

- Spinnaker – a tool for multi-cloud continuous delivery and canary analysis

- Elasticsearch – an application or site search service

- Kibana – a visualization tool for Elasticsearch data and a navigation tool for the Elastic Stack

- Fluentd – a data collector tool

The focus of this next section is design/architecture with OpenShift.

OpenShift Overview

Red Hat OpenShift is a Kubernetes platform for deployments that use microservices and containers to build and scale applications faster. By automating the installation, upgrade, and management of the container stack, OpenShift streamlines Kubernetes and facilitates day-to-day DevOps tasks.

- Developers provision applications with access to validated solutions and partners that are pushed to production via streamlined workflows.

- Operations can manage and scale the environment using the Web Console and built-in logging and monitoring.

Figure 1-6: OpenShift high-level architecture.

More advantages and components of OpenShift include:

- Choice of Infrastructure

- Master and worker nodes

- Image Registry

- Routing and Service Layer

- Developer operation (introduced but is beyond the scope of this doc)

Use cases for integrating Red Hat OpenShift with the Citrix Cloud Native Stack include:

- Legacy application support

- Rewrite/Responder policies deployed as APIs

- Microservices troubleshooting

- Day-to-day operations with security patches and feature enhancements

In this document we cover how Citrix ADC provides solid integration with the OpenShift routing and service layer.

OpenShift Projects

The first new concept OpenShift adds is the project, which effectively wraps a namespace; access to the namespace is controlled through the project. Access is controlled through an authentication and authorization model based on users and groups. Projects in OpenShift therefore provide the walls between namespaces, ensuring that users or applications can only see and access what they are allowed to.
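Because project access is granted through OpenShift's user- and group-based authorization, giving a developer access to one project usually means binding a role inside that project's namespace. The following is a minimal sketch that assumes a hypothetical user named `developer` and the built-in `edit` cluster role. The `tier-2-adc` namespace is the one used later in this document.

```yaml
# Sketch: grant the hypothetical user "developer" edit rights in a single project only.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: developer-edit          # illustrative name
  namespace: tier-2-adc         # the project (namespace) being shared
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: edit                    # built-in role; a RoleBinding scopes it to this namespace
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: developer               # hypothetical user
```

The same binding is commonly created from the CLI with `oc adm policy add-role-to-user edit developer -n tier-2-adc`. Users without a binding in the project cannot see its resources.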

OpenShift Namespaces

The primary grouping concept in Kubernetes is the namespace. Namespaces are also a way to divide cluster resources between multiple users. That said, there is no security between namespaces in Kubernetes. If you are a "user" in a Kubernetes cluster, you can see all the different namespaces and the resources defined in them.

OpenShift Software Defined Networking (SDN)

OpenShift Container Platform uses a software-defined networking (SDN) approach to provide a unified cluster network that enables communication between pods across the OpenShift Container Platform cluster. This pod network is established and maintained by the OpenShift SDN, which configures an overlay network using Open vSwitch (OVS).

OpenShift SDN provides three SDN plug-ins for configuring the pod network:

- The ovs-subnet plug-in is the original plug-in, which provides a “flat” pod network where every pod can communicate with every other pod and service.

- The ovs-multitenant plug-in provides project-level isolation for pods and services. Each project receives a unique Virtual Network ID (VNID) that identifies traffic from pods assigned to the project. Pods from different projects cannot send packets to or receive packets from pods and services of a different project. However, projects that receive VNID 0 are more privileged: they are allowed to communicate with all other pods, and all other pods can communicate with them. In OpenShift Container Platform clusters, the default project has VNID 0. This allows certain services, such as the load balancer, to communicate with all other pods in the cluster and vice versa.

- The ovs-networkpolicy plug-in allows project administrators to configure their own isolation policies using NetworkPolicy objects.
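With the ovs-networkpolicy plug-in, isolation is expressed with standard Kubernetes NetworkPolicy objects. The following minimal sketch assumes that plug-in is enabled and uses an illustrative policy name. It lets pods in a project accept traffic only from other pods in the same project, which roughly reproduces ovs-multitenant behavior for that project.

```yaml
# Sketch: allow ingress to every pod in the namespace only from pods in the same namespace.
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-same-namespace    # illustrative name
  namespace: default            # create one copy in each project to be isolated
spec:
  podSelector: {}               # empty selector: the policy applies to all pods in the namespace
  ingress:
  - from:
    - podSelector: {}           # only pods from this same namespace may connect
```

With this policy in place, traffic from a router or ingress controller running in another project is also blocked unless a further policy explicitly allows it.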

OpenShift Routing and Plug-Ins

An OpenShift administrator can deploy routers in an OpenShift cluster, which enable routes created by developers to be used by external clients. The routing layer in OpenShift is pluggable, and two available router plug-ins are provided and supported by default.

OpenShift routers provide external host name mapping and load balancing to services over protocols that pass distinguishing information directly to the router; the host name must be present in the protocol in order for the router to determine where to send it.

Router plug-ins assume they can bind to host ports 80 and 443, which allows external traffic to reach the host and then pass through the router. Routers also assume that networking is configured so that they can access all pods in the cluster.

The OpenShift router is the ingress point for all external traffic destined for services in your OpenShift installation. OpenShift provides and supports the following router plug-ins:

- The HAProxy template router is the default plug-in. It uses the openshift3/ose-haproxy-router image to run an HAProxy instance alongside the template router plug-in inside a container on OpenShift. It currently supports HTTP(S) traffic and TLS-enabled traffic via SNI. The router's container listens on the host network interface, unlike most containers that listen only on private IPs. The router proxies external requests for route names to the IPs of actual pods identified by the service associated with the route.

- The Citrix ingress controller can be deployed as a router plug-in in the OpenShift cluster to integrate with Citrix ADCs deployed in your environment. The Citrix ingress controller enables you to use the advanced load balancing and traffic management capabilities of Citrix ADC with your OpenShift cluster. See Deploy the Citrix ingress controller as a router plug-in in an OpenShift cluster.

OpenShift Routes and Ingress Methods

In an OpenShift cluster, external clients need a way to access the services provided by pods. OpenShift provides two resources for communicating with services running in the cluster: routes and Ingress.

Routes

In an OpenShift cluster, a route exposes a service on a given domain name or associates a domain name with a service. OpenShift routers route external requests to services inside the OpenShift cluster according to the rules specified in routes. When you use the OpenShift router, you must also configure the external DNS to make sure that the traffic is landing on the router.

OpenShift routes can be secured or unsecured. Secured routes specify the TLS termination of the route.

The Citrix ingress controller supports the following OpenShift routes:

- Unsecured Routes: For unsecured routes, HTTP traffic is not encrypted.

- Edge Termination: For edge termination, TLS is terminated at the router. Traffic from the router to the endpoints over the internal network is not encrypted.

- Passthrough Termination: With passthrough termination, the router is not involved in TLS offloading and encrypted traffic is sent straight to the destination.

- Re-encryption Termination: In re-encryption termination, the router terminates the TLS connection but then establishes another TLS connection to the endpoint.
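The edge termination case can be illustrated with a route that exposes the `hello-world-service` from the deployment example later in this document. In this sketch, the route name and host are assumptions. The certificate fields (`tls.certificate`, `tls.key`) are omitted here but would normally be supplied. The other secured types are selected by changing `termination` to `passthrough` or `reencrypt`.

```yaml
# Sketch: an edge-terminated route for hello-world-service.
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: hello-world-edge                     # illustrative name
spec:
  host: helloworld.com                       # external host name clients use
  to:
    kind: Service
    name: hello-world-service
  port:
    targetPort: 8080                         # pod port behind the service
  tls:
    termination: edge                        # TLS ends at the router; router-to-pod traffic is unencrypted
    insecureEdgeTerminationPolicy: Redirect  # redirect plain HTTP clients to HTTPS
```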

Based on how you want to use Citrix ADC, there are two ways to deploy the Citrix Ingress Controller as a router plug-in in the OpenShift cluster: as a Citrix ADC CPX within the cluster or as a Citrix ADC MPX/VPX outside the cluster.

Deploy Citrix ADC CPX as a router within the OpenShift cluster

The Citrix Ingress controller is deployed as a sidecar alongside the Citrix ADC CPX container in the same pod. In this mode, the Citrix ingress controller configures the Citrix ADC CPX. See Deploy Citrix ADC CPX as a router within the OpenShift cluster.

Deploy Citrix ADC MPX/VPX as a router outside the OpenShift cluster

The Citrix ingress controller is deployed as a stand-alone pod and allows you to control the Citrix ADC MPX, or VPX appliance from outside the OpenShift cluster. See Deploy Citrix ADC MPX/VPX as a router outside the OpenShift cluster.

Ingress

Kubernetes Ingress provides you a way to route requests to services based on the request host or path, centralizing a number of services into a single entry point.

The Citrix Ingress Controller is built around Kubernetes Ingress, automatically configuring one or more Citrix ADC appliances based on the Ingress resource.

Routing with Ingress can be done by:

- Host name based routing

- Path based routing

- Wildcard based routing

- Exact path matching

- Non-Hostname routing

- Default back end

See Ingress configurations for examples and more information.
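The following sketch combines three of these methods in one resource: host name based routing, path based routing under that host, and a default back end for requests that match no rule. It reuses the `vpx` ingress class and `hello-world-service` from the deployment example later in this document. `api-service` is a hypothetical second service.

```yaml
# Sketch: host name, path, and default back end routing in a single Ingress.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: routing-example                    # illustrative name
  annotations:
    kubernetes.io/ingress.class: "vpx"
spec:
  backend:                                 # default back end for requests that match no rule
    serviceName: hello-world-service
    servicePort: 80
  rules:
  - host: helloworld.com                   # host name based routing
    http:
      paths:
      - path: /api                         # path based routing under this host
        backend:
          serviceName: api-service         # hypothetical service
          servicePort: 80
      - path: /
        backend:
          serviceName: hello-world-service
          servicePort: 80
```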

Deploy the Citrix ingress controller as an OpenShift router plug-in

Based on how you want to use Citrix ADC, there are two ways to deploy the Citrix Ingress Controller as a router plug-in in the OpenShift cluster:

- As a sidecar container alongside Citrix ADC CPX in the same pod: In this mode, the Citrix ingress controller configures the Citrix ADC CPX. See Deploy Citrix ADC CPX as a router within the OpenShift cluster.

- As a standalone pod in the OpenShift cluster: In this mode, you can control the Citrix ADC MPX or VPX appliance deployed outside the cluster. See Deploy Citrix ADC MPX/VPX as a router outside the OpenShift cluster.

Recommended Architectures

We recommend the following Architectures for customers when designing their microservices architectures:

- Citrix Unified Ingress

- Citrix 2-Tier Ingress

- Citrix Service Mesh Lite

Figure 1-2: The architecture ranges from relatively simple to more complex and feature‑rich.

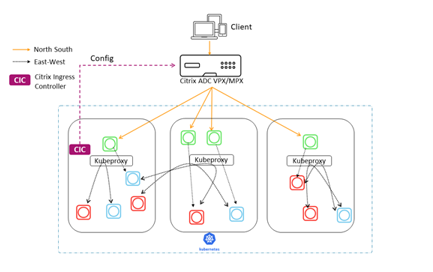

Citrix Unified Ingress

In a Unified Ingress deployment, Citrix ADC MPX or VPX devices proxy North-South traffic from the clients to the enterprise-grade applications deployed as microservices inside the cluster. The Citrix ingress controller is deployed as a pod or sidecar in the Kubernetes cluster to automate the configuration of Citrix ADC devices (MPX or VPX) based on the changes to the microservices or the Ingress resources.

You can begin implementing the Unified Ingress Model while your application is still a monolith. Simply position the Citrix ADC as a reverse proxy in front of your application server and implement the features described later. You are then in a good position to convert your application to microservices.

Communication between the microservices is handled through a mechanism of your choice (kube-proxy, IPVS, and so on).

Functionality provided in different categories

The capabilities of the Unified Ingress architecture fall into three groups.

The features in the first group optimize performance:

- Load balancing

- Low‑latency connectivity

- High availability

The features in the second group improve security and make application management easier:

- Rate limiting

- SSL/TLS termination

- HTTP/2 support

- Health checks

The features in the final group are specific to microservices:

- Central communications point for services

- API gateway capability
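As an example of the second group, SSL/TLS termination on the Citrix ADC can be requested from inside the cluster by adding a `tls` section to an Ingress. This minimal sketch assumes two things: a TLS secret named `hello-world-tls` (hypothetical) already exists in the same namespace, and the Citrix ingress controller watches the `vpx` ingress class.

```yaml
# Sketch: terminate TLS for helloworld.com on the ingress Citrix ADC.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: hello-world-tls-ingress            # illustrative name
  annotations:
    kubernetes.io/ingress.class: "vpx"
spec:
  tls:
  - hosts:
    - helloworld.com
    secretName: hello-world-tls            # hypothetical secret holding tls.crt and tls.key
  rules:
  - host: helloworld.com
    http:
      paths:
      - path: /
        backend:
          serviceName: hello-world-service
          servicePort: 80
```

The secret could be created from existing certificate files with `oc create secret tls hello-world-tls --cert=tls.crt --key=tls.key`, where the file names are placeholders.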

Summary

Features with the Unified Ingress Model include robust load-balancing to services, a central communication point, Dynamic Service Discovery, low latency connectivity, High Availability, rate limiting, SSL/TLS termination, HTTP/2, and more.

The Unified Ingress model makes it easy to manage traffic, load balance requests, and dynamically respond to changes in the back end microservices application.

Advantages include:

- North-South traffic flows scale well, are visible for observation and monitoring, and support continuous delivery with tools such as Spinnaker and Citrix ADM

- A single tier unifies the infrastructure team who manages the network and platform services, and reduces hops to lower latency

- Suitable for internal applications that do not need Web App Firewall and SSL Offload, although these can be added later

Disadvantages include:

- No East-West security with kube-proxy, but can add Calico for L4 Segmentation

- Kube-proxy scalability is unknown

- There is limited to no visibility of East-West traffic, since kube-proxy does not provide visibility, control, or logs; this limits open tool integration and continuous delivery

- The platform team must also be network savvy

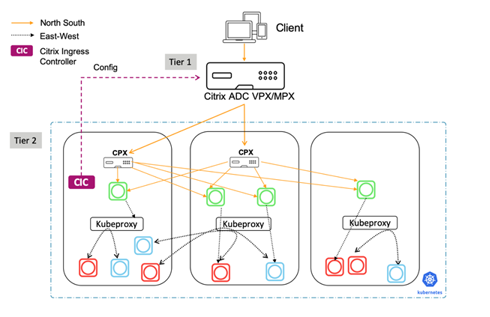

Citrix 2-Tier Ingress

The 2-Tier Ingress architectural model is a great solution for Cloud Native novices. In this model, the Citrix ADC in tier 1 manages incoming traffic but sends requests to the tier-2 ADC managed by developers rather than directly to the service instances. The 2-Tier Ingress model applies policies, written by the Platform and Developer teams, to inbound traffic only, and enables cloud scale and multitenancy.

Figure 1-4: Diagram of the Citrix 2-Tier Ingress Model with a tier-1 Citrix ADC VPX/MPX and tier-2 Citrix ADC CPX containers.

Functionality provided by Tier-1

The first tier ADC, managed by the traditional Networking team, provides L4 load balancing, Citrix Web App Firewall, SSL Offload, and reverse proxy services. The Citrix ADC MPX or VPX devices in Tier-1 proxy the traffic (North-South) from the client to Citrix ADC CPXs in Tier-2.

By default the Citrix Ingress Controller will program the following configurations on Tier-1:

- Reverse proxy of applications to users:

  - Content Switching virtual servers

  - Virtual server (front-end, user-facing)

  - Service Groups

- SSL Offload

- NetScaler Logging / Debugging

- Health monitoring of services

Functionality provided by Tier-2

While the first tier ADC provides reverse proxy services, the second tier ADC, managed by the Platform team, serves as a communication point to microservices, providing:

- Dynamic service discovery

- Load balancing

- Visibility and rich metrics

The Tier-2 Citrix ADC CPX then routes the traffic to the microservices in the Kubernetes cluster. The Citrix ingress controller deployed as a standalone pod configures the Tier-1 devices. And, the sidecar controller in one or more Citrix ADC CPX pods configures the associated Citrix ADC CPX in the same pod.
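Here is a minimal sketch of how the two tiers might be described, assuming each controller watches its own ingress class. The standalone controller for the Tier-1 MPX/VPX reads class `vpx`, as in the Unified Ingress example later in this document, and the CPX sidecar controller reads class `cpx`. The `cpx` class name, the `cpx-service` Service that fronts the Tier-2 CPX pods, and its port are assumptions for illustration.

```yaml
# Tier-1: read by the standalone controller that configures the MPX/VPX.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: hello-world-tier1                  # illustrative name
  namespace: tier-2-adc
  annotations:
    kubernetes.io/ingress.class: "vpx"
spec:
  rules:
  - host: helloworld.com
    http:
      paths:
      - path: /
        backend:
          serviceName: cpx-service         # hypothetical service in front of the Tier-2 CPX pods
          servicePort: 80
---
# Tier-2: read by the sidecar controller that configures the CPX.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: hello-world-tier2                  # illustrative name
  namespace: tier-2-adc
  annotations:
    kubernetes.io/ingress.class: "cpx"     # assumed class name for the CPX sidecar controller
spec:
  rules:
  - host: helloworld.com
    http:
      paths:
      - path: /
        backend:
          serviceName: hello-world-service # the application service
          servicePort: 80
```

The key design point is that the Tier-1 rule targets the CPX service rather than the application service. The Tier-1 ADC therefore only needs to track the CPX pods, while the Tier-2 CPX follows the application endpoints as they change.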

Summary

The networking architecture for microservices in the 2-tier model uses two ADCs configured for different roles. The Tier-1 ADC acts as a user-facing proxy server and the Tier-2 ADC as a proxy for the microservices.

Splitting different types of functions between two different tiers provides speed, control, and opportunities to optimize for security. In the second tier, load balancing is fast, robust, and configurable.

With this model, there is a clear separation between the ADC administrator and the Developer. It is BYOL for Developers.

Advantages include:

- North-South traffic flows scale well, are visible for observation and monitoring, and support continuous delivery with tools such as Spinnaker and Citrix ADM

- Simplest and fastest deployment for a Cloud Native novice, with limited new learning for Network and Platform teams

Disadvantages include:

- No East-West security with kube-proxy, but can add Calico for L4 Segmentation

- Kube-proxy scalability is unknown

- There is limited to no visibility of East-West traffic, since kube-proxy does not provide visibility, control, or logs; this limits open tool integration and continuous delivery.

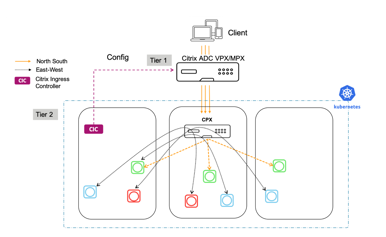

Citrix Service Mesh Lite

The Service Mesh Lite is the most feature rich of the three models. It is internally secure, fast, efficient, and resilient, and it can be used to enforce policies for inbound and inter-container traffic.

The Service Mesh Lite model is suitable for several use cases, which include:

- Health and finance apps – Regulatory and user requirements mandate a combination of security and speed for financial and health apps, with billions of dollars in financial and reputational value at stake.

- Ecommerce apps – User trust is a huge issue for ecommerce and speed is a key competitive differentiator. So, combining speed and security is crucial.

Summary

Advantages include:

- A more robust approach to networking, in which a load balancer CPX applies full L7 policies to inbound and inter-container traffic

- Richer observability, analytics, continuous delivery and security for North-South and East-West traffic

- Canary for each container with an embedded Citrix ADC

- A single tier unifies the infrastructure team who manages the network and platform services, and reduces hops to lower latency

Disadvantages include:

- More complex model to deploy

- Platform team must be network savvy

Summary of Architecture Choices

Citrix Unified Ingress

- North-South (NS) Application Traffic - one Citrix ADC is responsible for L4 and L7 NS traffic, security, and external load balancing outside of the K8s cluster.

- East-West (EW) Application Traffic - kube-proxy is responsible for L4 EW traffic.

- Security - the ADC is responsible for securing NS traffic and authenticating users. Kube-proxy is responsible for L4 EW traffic.

- Scalability and Performance - NS traffic scales well, and clustering is an option. EW traffic and kube-proxy scalability is unknown.

- Observability - the ADC provides excellent observability for NS traffic, but there is no observability for EW traffic.

Citrix 2-Tier Ingress

- North-South (NS) Application Traffic - the Tier-1 ADC is responsible for SSL offload, Web App Firewall, and L4 NS traffic. It is used for both monolith and CN applications. The Tier-2 CPX manages rapid change of K8s and L7 NS traffic.

- East-West (EW) Application Traffic - kube-proxy is responsible for L4 EW traffic.

- Security - the Tier-1 ADC is responsible for securing NS traffic. Authentication can occur at either ADC. EW traffic is not secured with kube-proxy. Add Calico for L4 segmentation.

- Scalability and Performance - NS traffic scales well, and clustering is an option. EW traffic and kube-proxy scalability is unknown.

- Observability - the Tier-1 ADC provides excellent observability for NS traffic, but there is no observability for EW traffic.

Citrix Service Mesh Lite

- North-South (NS) Application Traffic - the Tier-1 ADC is responsible for SSL offload, Web App Firewall, and L4 NS traffic. It is used for both monolith and CN applications. The Tier-2 CPX manages rapid change of K8s and L7 NS traffic.

- East-West (EW) Application Traffic - the Tier-2 CPX or any open source proxy is responsible for L4 EW traffic. Customers can select which applications use the CPX and which use kube-proxy.

- Security - the Tier-1 ADC is responsible for securing NS traffic. Authentication can occur at either ADC. The Citrix CPX is responsible for authentication, SSL offload, and securing EW traffic. Encryption can be applied at the application level.

- Scalability and Performance - NS and EW traffic scale well, but this model adds one in-line hop.

- Observability - the Tier-1 ADC provides excellent observability of NS traffic. The CPX in Tier-2 provides observability of EW traffic, but it can be disabled to reduce the CPX memory or CPU footprint.

How to Deploy

Citrix Unified Ingress

To validate a Citrix Unified Ingress deployment with OpenShift, use a sample “hello-world” application with a Citrix ADC VPX or MPX. The default namespace for OpenShift, “default”, is used for this deployment.

- A Citrix ADC instance is built manually and configured with an NSIP/SNIP. Instructions for installing Citrix ADC on XenServer can be found here.

- Copy the following YAML file example into an OpenShift directory and name it application.yaml.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world
spec:
  selector:
    matchLabels:
      run: load-balancer-example
  replicas: 2
  template:
    metadata:
      labels:
        run: load-balancer-example
    spec:
      containers:
      - name: hello-world
        image: gcr.io/google-samples/node-hello:1.0
        ports:
        - containerPort: 8080
          protocol: TCP
```

- Deploy the application.

```shell
oc apply -f application.yaml
```

- Ensure pods are running.

```shell
oc get pods
```

- Copy the following YAML file example into an OpenShift directory and name it service.yaml.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: hello-world-service
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 8080
  selector:
    run: load-balancer-example
```

- Expose the application via NodePort with a service.

```shell
oc apply -f service.yaml
```

- Verify that the service was created.

```shell
oc get service
```

- Copy the following YAML file example into an OpenShift directory and name it ingress.yaml. You must change the annotation "ingress.citrix.com/frontend-ip" to a free IP address, which becomes the VIP on the Citrix ADC.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: hello-world-ingress
  annotations:
    kubernetes.io/ingress.class: "vpx"
    ingress.citrix.com/insecure-termination: "redirect"
    ingress.citrix.com/frontend-ip: "10.217.101.183"
spec:
  rules:
  - host: helloworld.com
    http:
      paths:
      - path:
        backend:
          serviceName: hello-world-service
          servicePort: 80
```

- Deploy the Ingress YAML file.

```shell
oc apply -f ingress.yaml
```

- The application pods are now exposed through a service, and traffic can be routed to them using Ingress. Next, install the Citrix Ingress Controller (CIC) to push these configurations to the Tier 1 ADC VPX. Before deploying the CIC, save the following RBAC definitions as rbac.yaml; they give the CIC the permissions it needs to run.

Note:

The RBAC YAML file specifies the namespace (default). Change it to match the namespace you are using.

```yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: cpx
rules:
- apiGroups: [""]
  resources: ["services", "endpoints", "ingresses", "pods", "secrets", "nodes", "routes", "routes/status", "tokenreviews", "subjectaccessreviews"]
  verbs: ["*"]
- apiGroups: ["extensions"]
  resources: ["ingresses", "ingresses/status"]
  verbs: ["*"]
- apiGroups: ["citrix.com"]
  resources: ["rewritepolicies"]
  verbs: ["*"]
- apiGroups: ["apps"]
  resources: ["deployments"]
  verbs: ["*"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: cpx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cpx
subjects:
- kind: ServiceAccount
  name: cpx
  namespace: default
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cpx
  namespace: default
```

- Deploy the RBAC file.

```shell
oc apply -f rbac.yaml
```

Before deploying the CIC, edit the YAML file. Under spec, add either the NSIP or the SNIP as long as management is enabled on the SNIP, of the Tier 1 ADC. Notice the argument “ingress-classes” is the same as the ingress class annotation specified in the Ingress YAML file.

apiVersion: v1 kind: Pod metadata: name: hello-world-cic labels: app: hello-world-cic spec: serviceAccountName: cpx containers: - name: hello-world-cic image: "quay.io/citrix/citrix-k8s-ingress-controller:1.1.3" env: # Set NetScaler NSIP/SNIP, SNIP in case of HA (mgmt has to be enabled) - name: "NS_IP" value: "10.217.101.193" # Set username for Nitro # Set log level - name: "NS_ENABLE_MONITORING" value: "NO" - name: "NS_USER" value: "nsroot" - name: "NS_PASSWORD" value: "nsroot" - name: "EULA" value: "yes" - name: "LOGLEVEL" value: "DEBUG" args: - --ingress-classes vpx - --feature-node-watch false imagePullPolicy: IfNotPresent <!--NeedCopy--> -

Deploy the CIC.

oc apply -f cic.yaml

Verify all pods are running.

oc get pods

Edit the hosts file on your local machine to map helloworld.com to the VIP on the Citrix ADC specified in the Ingress YAML file.

Navigate to helloworld.com in a browser. “Hello Kubernetes!” should appear.

Note: Use the following commands to delete resources:

```shell
oc delete pods (pod name) -n (namespace name)
oc delete deployment (deployment name) -n (namespace name)
oc delete service (service name) -n (namespace name)
oc delete ingress (ingress name) -n (namespace name)
oc delete serviceaccounts (serviceaccounts name) -n (namespace name)
```

Citrix 2-Tier Ingress

To validate a Citrix 2-Tier Ingress deployment with OpenShift, use a sample “hello-world” application with a Citrix ADC VPX or MPX. The namespace “tier-2-adc” is used for this deployment.

Note: When deploying pods, services, and Ingress, the namespace must be specified using the parameter “-n (namespace name)”.
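As an alternative to passing -n on every command, a manifest can carry its namespace in metadata.namespace. The sketch below shows the hello-world-service-2 service used in this section written that way; the namespace line is the only addition. If the namespace in a manifest and the -n value disagree, oc apply should reject the request, so it is best to pick one convention and use it consistently.

```yaml
# Sketch: hello-world-service-2 with its namespace pinned in the manifest.
apiVersion: v1
kind: Service
metadata:
  name: hello-world-service-2
  namespace: tier-2-adc      # added; the step-by-step files rely on -n tier-2-adc instead
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 8080
  selector:
    run: load-balancer-example
```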

A Citrix ADC instance is built by hand and configured with an NSIP/SNIP. Instructions for installing Citrix ADC on XenServer can be found here. If the instance was already configured, clear any virtual servers in Load Balancing or Content Switching that were pushed to the ADC when hello-world was deployed as a Unified Ingress.

Create a namespace called “tier-2-adc”.

oc create namespace tier-2-adc

Copy the following YAML file example into an OpenShift directory and name it application-2t.yaml.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world
spec:
  selector:
    matchLabels:
      run: load-balancer-example
  replicas: 2
  template:
    metadata:
      labels:
        run: load-balancer-example
    spec:
      containers:
        - name: hello-world
          image: gcr.io/google-samples/node-hello:1.0
          ports:
            - containerPort: 8080
              protocol: TCP
```

Deploy the application in the namespace.

oc apply -f application-2t.yaml -n tier-2-adc

Ensure pods are running.

oc get pods -n tier-2-adc

Copy the following YAML file example into an OpenShift directory and name it service-2t.yaml.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: hello-world-service-2
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 8080
  selector:
    run: load-balancer-example
```

Expose the application via NodePort with a service.

oc apply -f service-2t.yaml -n tier-2-adc

Verify that the service was created.

oc get service -n tier-2-adc

Copy the following YAML file example into an OpenShift directory and name it ingress-2t.yaml.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: hello-world-ingress-2
  annotations:
    kubernetes.io/ingress.class: "cpx"
spec:
  backend:
    serviceName: hello-world-service-2
    servicePort: 80
```

Deploy the Ingress YAML file.

oc apply -f ingress-2t.yaml -n tier-2-adc

Deploy an RBAC file that gives the CIC and CPX the correct permissions to run.

Note:

The RBAC YAML file specifies the namespace, so change it to match the namespace being used. Name the file rbac-2t.yaml.

```yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: cpx
rules:
  - apiGroups: [""]
    resources: ["services", "endpoints", "ingresses", "pods", "secrets", "nodes", "routes", "routes/status", "tokenreviews", "subjectaccessreviews"]
    verbs: ["*"]
  - apiGroups: ["extensions"]
    resources: ["ingresses", "ingresses/status"]
    verbs: ["*"]
  - apiGroups: ["citrix.com"]
    resources: ["rewritepolicies"]
    verbs: ["*"]
  - apiGroups: ["apps"]
    resources: ["deployments"]
    verbs: ["*"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: cpx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cpx
subjects:
  - kind: ServiceAccount
    name: cpx
    namespace: tier-2-adc
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cpx
  namespace: tier-2-adc
```

Deploy the RBAC file.

oc apply -f rbac-2t.yaml

The service account needs elevated permissions to create a CPX.

oc adm policy add-scc-to-user privileged system:serviceaccount:tier-2-adc:cpx
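The command above adds the cpx service account to the users list of the privileged SecurityContextConstraints (SCC), which is what allows the CPX container to run with privileged: true. As an illustrative check, the relevant part of the output of oc get scc privileged -o yaml should then include the new entry; other fields and the pre-existing users are omitted here.

```yaml
# Illustrative excerpt of "oc get scc privileged -o yaml" after the command above.
apiVersion: security.openshift.io/v1
kind: SecurityContextConstraints
metadata:
  name: privileged
allowPrivilegedContainer: true   # permits securityContext.privileged: true
users:
  # ... pre-existing users omitted ...
  - system:serviceaccount:tier-2-adc:cpx   # added by oc adm policy add-scc-to-user
```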

Create the CPX YAML file and name it cpx-2t.yaml. This file deploys the CPX and the service that exposes it. Notice that the --ingress-classes argument matches the ingress class annotation in the ingress-2t.yaml file.

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: hello-world-cpx-2
spec:
  replicas: 1
  template:
    metadata:
      name: hello-world-cpx-2
      labels:
        app: hello-world-cpx-2
        app1: exporter
      annotations:
        NETSCALER_AS_APP: "True"
    spec:
      serviceAccountName: cpx
      containers:
        - name: hello-world-cpx-2
          image: "quay.io/citrix/citrix-k8s-cpx-ingress:13.0-36.28"
          securityContext:
            privileged: true
          env:
            - name: "EULA"
              value: "yes"
            - name: "KUBERNETES_TASK_ID"
              value: ""
          imagePullPolicy: Always
        # Add cic as a sidecar
        - name: cic
          image: "quay.io/citrix/citrix-k8s-ingress-controller:1.1.3"
          env:
            - name: "EULA"
              value: "yes"
            - name: "NS_IP"
              value: "127.0.0.1"
            - name: "NS_PROTOCOL"
              value: "HTTP"
            - name: "NS_PORT"
              value: "80"
            - name: "NS_DEPLOYMENT_MODE"
              value: "SIDECAR"
            - name: "NS_ENABLE_MONITORING"
              value: "YES"
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.namespace
          args:
            - --ingress-classes cpx
          imagePullPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  name: lb-service-cpx
  labels:
    app: lb-service-cpx
spec:
  type: NodePort
  ports:
    - port: 80
      protocol: TCP
      name: http
      targetPort: 80
  selector:
    app: hello-world-cpx-2
```

Deploy the CPX.

oc apply -f cpx-2t.yaml -n tier-2-adc

Verify the pod is running and the service was created.

```shell
oc get pods -n tier-2-adc
oc get service -n tier-2-adc
```

Create an Ingress to route traffic from the VPX to the CPX. The front-end IP should be a free IP on the ADC. Name the file ingress-cpx-2t.yaml.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: hello-world-ingress-vpx-2
  annotations:
    kubernetes.io/ingress.class: "helloworld"
    ingress.citrix.com/insecure-termination: "redirect"
    ingress.citrix.com/frontend-ip: "10.217.101.183"
spec:
  rules:
    - host: helloworld.com
      http:
        paths:
          - path:
            backend:
              serviceName: lb-service-cpx
              servicePort: 80
```

Deploy the Ingress.

oc apply -f ingress-cpx-2t.yaml -n tier-2-adc

Before deploying the CIC, edit its YAML file, cic-2t.yaml. Under spec, set NS_IP to the NSIP of the Tier 1 ADC, or to its SNIP if management access is enabled on the SNIP. The --ingress-classes argument matches the ingress class annotation in ingress-cpx-2t.yaml.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hello-world-cic
  labels:
    app: hello-world-cic
spec:
  serviceAccountName: cpx
  containers:
    - name: hello-world-cic
      image: "quay.io/citrix/citrix-k8s-ingress-controller:1.1.3"
      env:
        # Set NetScaler NSIP/SNIP, SNIP in case of HA (mgmt has to be enabled)
        - name: "NS_IP"
          value: "10.217.101.176"
        - name: "NS_ENABLE_MONITORING"
          value: "NO"
        # Set username and password for Nitro
        - name: "NS_USER"
          value: "nsroot"
        - name: "NS_PASSWORD"
          value: "nsroot"
        - name: "EULA"
          value: "yes"
        # Set log level
        - name: "LOGLEVEL"
          value: "DEBUG"
      args:
        - --ingress-classes helloworld
        - --feature-node-watch false
      imagePullPolicy: IfNotPresent
```

Deploy the CIC.

oc apply -f cic-2t.yaml -n tier-2-adc

Verify all pods are running.

oc get pods -n tier-2-adc

Edit the hosts file on your local machine to map helloworld.com to the VIP on the Citrix ADC specified in the Ingress YAML file that routes from the VPX to the CPX.

Navigate to helloworld.com in a browser. “Hello Kubernetes!” should appear.

Citrix Service Mesh Lite

Service Mesh Lite allows a CPX (or another Citrix ADC appliance) to replace the built-in HAProxy functionality. This extends the north-south (N-S) capabilities in Kubernetes to also provide load balancing, routing, and observability for east-west (E-W) traffic. Citrix ADC (MPX, VPX, or CPX) can provide the following benefits for E-W traffic:

- Mutual TLS or SSL offload

- Content based routing to allow or block traffic based on HTTP or HTTPS header parameters

- Advanced load balancing algorithms (for example, least connections or least response time)

- Observability of east-west traffic through measuring golden signals (errors, latencies, saturation, or traffic volume). Citrix ADM’s Service Graph is an observability solution to monitor and debug microservices.

In this deployment scenario, we deploy the Bookinfo application and observe how it functions by default. We then replace the default Kubernetes services and use a CPX and a VPX to proxy the east-west traffic.

Citrix Service Mesh Lite with a CPX

To validate a Citrix Service Mesh Lite deployment with OpenShift, use the sample Bookinfo application with a Citrix ADC VPX or MPX as the Tier 1 ADC. A dedicated namespace is used for this deployment.

A Citrix ADC instance is built by hand and configured with an NSIP/SNIP. Instructions for installing Citrix ADC on XenServer can be found here.

Create a namespace for this deployment. In this example, sml is used.

oc create namespace sml

Copy the following YAML to create the deployment and services for Bookinfo. Name it bookinfo.yaml.

```yaml
##################################################################################################
# Details service
##################################################################################################
apiVersion: v1
kind: Service
metadata:
  name: details
  labels:
    app: details
    service: details
spec:
  ports:
    - port: 9080
      name: http
  selector:
    app: details
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: details-v1
  labels:
    app: details
    version: v1
spec:
  replicas: 1
  template:
    metadata:
      annotations:
        sidecar.istio.io/inject: "false"
      labels:
        app: details
        version: v1
    spec:
      containers:
        - name: details
          image: docker.io/maistra/examples-bookinfo-details-v1:0.12.0
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9080
---
##################################################################################################
# Ratings service
##################################################################################################
apiVersion: v1
kind: Service
metadata:
  name: ratings
  labels:
    app: ratings
    service: ratings
spec:
  ports:
    - port: 9080
      name: http
  selector:
    app: ratings
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: ratings-v1
  labels:
    app: ratings
    version: v1
spec:
  replicas: 1
  template:
    metadata:
      annotations:
        sidecar.istio.io/inject: "false"
      labels:
        app: ratings
        version: v1
    spec:
      containers:
        - name: ratings
          image: docker.io/maistra/examples-bookinfo-ratings-v1:0.12.0
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9080
---
##################################################################################################
# Reviews service
##################################################################################################
apiVersion: v1
kind: Service
metadata:
  name: reviews
  labels:
    app: reviews
    service: reviews
spec:
  ports:
    - port: 9080
      name: http
  selector:
    app: reviews
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: reviews-v1
  labels:
    app: reviews
    version: v1
spec:
  replicas: 1
  template:
    metadata:
      annotations:
        sidecar.istio.io/inject: "false"
      labels:
        app: reviews
        version: v1
    spec:
      containers:
        - name: reviews
          image: docker.io/maistra/examples-bookinfo-reviews-v1:0.12.0
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9080
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: reviews-v2
  labels:
    app: reviews
    version: v2
spec:
  replicas: 1
  template:
    metadata:
      annotations:
        sidecar.istio.io/inject: "false"
      labels:
        app: reviews
        version: v2
    spec:
      containers:
        - name: reviews
          image: docker.io/maistra/examples-bookinfo-reviews-v2:0.12.0
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9080
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: reviews-v3
  labels:
    app: reviews
    version: v3
spec:
  replicas: 1
  template:
    metadata:
      annotations:
        sidecar.istio.io/inject: "false"
      labels:
        app: reviews
        version: v3
    spec:
      containers:
        - name: reviews
          image: docker.io/maistra/examples-bookinfo-reviews-v3:0.12.0
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9080
---
##################################################################################################
# Productpage services
##################################################################################################
apiVersion: v1
kind: Service
metadata:
  name: productpage-service
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 9080
  selector:
    app: productpage
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: productpage-v1
  labels:
    app: productpage
    version: v1
spec:
  replicas: 1
  template:
    metadata:
      annotations:
        sidecar.istio.io/inject: "false"
      labels:
        app: productpage
        version: v1
    spec:
      containers:
        - name: productpage
          image: docker.io/maistra/examples-bookinfo-productpage-v1:0.12.0
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9080
```


Deploy the bookinfo.yaml in the sml namespace.

oc apply -f bookinfo.yaml -n sml

Copy and deploy the Ingress file that maps to the product page service. Name the file ingress-productpage.yaml. The front-end IP should be a free VIP on the Citrix ADC VPX/MPX.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: productpage-ingress
  annotations:
    kubernetes.io/ingress.class: "bookinfo"
    ingress.citrix.com/insecure-termination: "redirect"
    ingress.citrix.com/frontend-ip: "10.217.101.182"
spec:
  rules:
    - host: bookinfo.com
      http:
        paths:
          - path:
            backend:
              serviceName: productpage-service
              servicePort: 80
```

oc apply -f ingress-productpage.yaml -n sml
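The insecure-termination: "redirect" annotation redirects HTTP requests to HTTPS, which presupposes that the Ingress also exposes an HTTPS front end. As a sketch, assuming a TLS certificate for bookinfo.com is available, a tls section could be added as shown below. The Secret name bookinfo-tls is hypothetical; such a Secret could be created with oc create secret tls bookinfo-tls --cert=(certificate file) --key=(key file) -n sml.

```yaml
# Sketch: ingress-productpage.yaml with an added tls section.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: productpage-ingress
  annotations:
    kubernetes.io/ingress.class: "bookinfo"
    ingress.citrix.com/insecure-termination: "redirect"
    ingress.citrix.com/frontend-ip: "10.217.101.182"
spec:
  tls:
    - hosts:
        - bookinfo.com
      secretName: bookinfo-tls   # hypothetical kubernetes.io/tls Secret in the sml namespace
  rules:
    - host: bookinfo.com
      http:
        paths:
          - path:
            backend:
              serviceName: productpage-service
              servicePort: 80
```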


Copy the following YAML for the RBAC file in the sml namespace and deploy it. Name the file rbac-cic-pp.yaml, as it is used for the CIC in front of the product page microservice.

```yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: cpx
rules:
  - apiGroups: [""]
    resources: ["services", "endpoints", "ingresses", "pods", "secrets", "routes", "routes/status", "nodes", "namespaces"]
    verbs: ["*"]
  - apiGroups: ["extensions"]
    resources: ["ingresses", "ingresses/status"]
    verbs: ["*"]
  - apiGroups: ["citrix.com"]
    resources: ["rewritepolicies", "vips"]
    verbs: ["*"]
  - apiGroups: ["apps"]
    resources: ["deployments"]
    verbs: ["*"]
  - apiGroups: ["apiextensions.k8s.io"]
    resources: ["customresourcedefinitions"]
    verbs: ["get", "list", "watch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: cpx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cpx
subjects:
  - kind: ServiceAccount
    name: cpx
    namespace: sml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cpx
  namespace: sml
```

oc apply -f rbac-cic-pp.yaml -n sml


Elevate the service account privileges to deploy the CIC and CPX.

oc adm policy add-scc-to-user privileged system:serviceaccount:sml:cpx

Edit the hosts file on the local machine to map bookinfo.com to the front-end IP specified in ingress-productpage.yaml.

Copy and deploy a CIC for the product page. Name the file cic-productpage.yaml. Set NS_IP to the NSIP of the Tier 1 ADC.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: productpage-cic
  labels:
    app: productpage-cic
spec:
  serviceAccountName: cpx
  containers:
    - name: productpage-cic
      image: "quay.io/citrix/citrix-k8s-ingress-controller:1.1.3"
      env:
        # Set NetScaler NSIP/SNIP, SNIP in case of HA (mgmt has to be enabled)
        - name: "NS_IP"
          value: "10.217.101.176"
        - name: "NS_ENABLE_MONITORING"
          value: "NO"
        # Set username and password for Nitro
        - name: "NS_USER"
          value: "nsroot"
        - name: "NS_PASSWORD"
          value: "nsroot"
        - name: "EULA"
          value: "yes"
        # Set log level
        - name: "LOGLEVEL"
          value: "DEBUG"
        - name: "NS_APPS_NAME_PREFIX"
          value: "BI-"
      args:
        - --ingress-classes bookinfo
        - --feature-node-watch false
      imagePullPolicy: IfNotPresent
```

oc apply -f cic-productpage.yaml -n sml


Navigate to bookinfo.com and click Normal user. The product page should pull details, reviews, and ratings, which are other microservices. At this point, the default OpenShift service networking, not a Citrix ADC, routes the traffic between the microservices (east-west).


Delete the service in front of the details microservice. Refresh the Bookinfo webpage and notice that the product page can no longer retrieve the details.

oc delete service details -n sml

Copy and deploy a replacement details service that selects the CPX pods (app: cpx), so that traffic coming from the product page to details goes through a CPX. Call this file detailsheadless.yaml.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: details
spec:
  ports:
    - port: 9080
      name: http
  selector:
    app: cpx
```

oc apply -f detailsheadless.yaml -n sml


Copy and deploy a new headless service, details-service, to sit in front of the details microservice. Name the file detailsservice.yaml.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: details-service
  labels:
    app: details-service
    service: details-service
spec:
  clusterIP: None
  ports:
    - port: 9080
      name: http
  selector:
    app: details
```

oc apply -f detailsservice.yaml -n sml


Expose the details-service with an ingress and deploy it. Call this file detailsingress.yaml.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: details-ingress
  annotations:
    kubernetes.io/ingress.class: "cpx"
    ingress.citrix.com/insecure-port: "9080"
spec:
  rules:
    - host: details
      http:
        paths:
          - path:
            backend:
              serviceName: details-service
              servicePort: 9080
```

oc apply -f detailsingress.yaml -n sml


Copy and deploy the CPXEastWest.yaml file.

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: cpx
  labels:
    app: cpx
    service: cpx
spec:
  replicas: 1
  template:
    metadata:
      name: cpx
      labels:
        app: cpx
        service: cpx
      annotations:
        NETSCALER_AS_APP: "True"
    spec:
      serviceAccountName: cpx
      containers:
        - name: reviews-cpx
          image: "quay.io/citrix/citrix-k8s-cpx-ingress:13.0-36.28"
          securityContext:
            privileged: true
          env:
            - name: "EULA"
              value: "yes"
            - name: "KUBERNETES_TASK_ID"
              value: ""
            - name: "MGMT_HTTP_PORT"
              value: "9081"
          ports:
            - name: http
              containerPort: 9080
            - name: https
              containerPort: 443
            - name: nitro-http
              containerPort: 9081
            - name: nitro-https
              containerPort: 9443
          imagePullPolicy: Always
        # Add cic as a sidecar
        - name: cic
          image: "quay.io/citrix/citrix-k8s-ingress-controller:1.2.0"
          env:
            - name: "EULA"
              value: "yes"
            - name: "NS_IP"
              value: "127.0.0.1"
            - name: "NS_PROTOCOL"
              value: "HTTP"
            - name: "NS_PORT"
              value: "80"
            - name: "NS_DEPLOYMENT_MODE"
              value: "SIDECAR"
            - name: "NS_ENABLE_MONITORING"
              value: "YES"
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.namespace
          args:
            - --ingress-classes cpx
          imagePullPolicy: Always
```

oc apply -f CPXEastWest.yaml -n sml

Refresh bookinfo.com; the details should now be pulled from the details microservice. A CPX was successfully deployed to proxy east-west traffic.
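With the CPX now in the east-west path, the advanced load balancing algorithms listed among the Service Mesh Lite benefits can be applied to the details traffic. The sketch below is detailsingress.yaml with one added annotation that requests the least response time method for details-service. It assumes the CIC version in use supports the ingress.citrix.com/lbvserver smart annotation; this is not part of the validated steps, so check the annotation reference for that CIC release before relying on it.

```yaml
# Sketch: detailsingress.yaml plus a per-service load balancing method.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: details-ingress
  annotations:
    kubernetes.io/ingress.class: "cpx"
    ingress.citrix.com/insecure-port: "9080"
    # Assumed smart annotation: JSON keyed by backend service name.
    ingress.citrix.com/lbvserver: '{"details-service":{"lbmethod":"LEASTRESPONSETIME"}}'
spec:
  rules:
    - host: details
      http:
        paths:
          - path:
            backend:
              serviceName: details-service
              servicePort: 9080
```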

Citrix Service Mesh Lite with a VPX/MPX


Run the following commands to delete the CPX being used as the east-west proxy. New files are then deployed to configure the VPX as the east-west proxy between the product page and the details microservices.

```shell
oc delete -f detailsheadless.yaml -n sml
oc delete -f detailsservice.yaml -n sml
oc delete -f detailsingress.yaml -n sml
oc delete -f CPXEastWest.yaml -n sml
```

Copy and deploy a service that sends traffic from the product page back to the VPX, and name the file detailstoVPX.yaml. Because this details service has no selector, the manually defined Endpoints object with the same name supplies its backend. The IP address in the Endpoints object should be a free VIP on the Citrix ADC VPX/MPX.

```yaml
kind: "Service"
apiVersion: "v1"
metadata:
  name: "details"
spec:
  ports:
    - name: "details"
      protocol: "TCP"
      port: 9080
---
kind: "Endpoints"
apiVersion: "v1"
metadata:
  name: "details"
subsets:
  - addresses:
      - ip: "10.217.101.182" # Ingress IP in MPX
    ports:
      - port: 9080
        name: "details"
```

oc apply -f detailstoVPX.yaml -n sml


Redeploy the detailsservice.yaml in front of the details microservice.

oc apply -f detailsservice.yaml -n sml

Copy and deploy the Ingress that exposes the details microservice to the VPX. Name the file detailsVPXingress.yaml. The front-end IP should match the Tier 1 ADC VIP used in the Endpoints object of detailstoVPX.yaml.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: details-ingress
  annotations:
    kubernetes.io/ingress.class: "vpx"
    ingress.citrix.com/insecure-port: "9080"
    ingress.citrix.com/frontend-ip: "10.217.101.182"
spec:
  rules:
    - host: details
      http:
        paths:
          - path:
            backend:
              serviceName: details-service
              servicePort: 9080
```

oc apply -f detailsVPXingress.yaml -n sml

Refresh bookinfo.com; the details should now be pulled from the details microservice. A VPX was successfully deployed to proxy east-west traffic.

Service Migration to Citrix ADC using Routes or Ingress Classes in OpenShift

Service Migration with Route Sharding

The Citrix Ingress Controller (CIC) acts as a router and distributes incoming traffic among the available pods.

This migration process can also be part of a cluster upgrade from legacy OpenShift topologies to automated deployments that use the Citrix CNC, CIC, and CPX components.

This solution can be achieved by two methods:

- CIC Router by Plug-In (Pod)

- CPX Router inside Openshift (Sidecar)

Both of the methods are described below along with migration examples.

Static Routes (default): map each OpenShift HostSubnet to the external ADC through a static route.

Static routes are common in legacy OpenShift deployments that use HAProxy. The static routes can be used in parallel with Citrix CNC, CIC, and CPX when migrating services from one service proxy to another without disrupting the deployed namespaces in a functioning cluster.

Example static route configuration for Citrix ADC:

oc get hostsubnet (OpenShift cluster) snippet:

```
oc311-master.example.com   10.x.x.x   10.128.0.0/23
oc311-node1.example.com    10.x.x.x   10.130.0.0/23
oc311-node2.example.com    10.x.x.x   10.129.0.0/23
```

show route (external Citrix VPX) snippet:

```
10.128.0.0   255.255.254.0   10.x.x.x   STATIC
10.129.0.0   255.255.254.0   10.x.x.x   STATIC
10.130.0.0   255.255.254.0   10.x.x.x   STATIC
```
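Each static route on the ADC is derived from one HostSubnet object: the route destination is the node's pod subnet and the next hop is the node's host IP. As an illustration, an excerpt of oc get hostsubnet oc311-node1.example.com -o yaml would carry those two values as shown below; the host IP stays elided as in the snippets above.

```yaml
# Illustrative excerpt of a HostSubnet object (network.openshift.io/v1).
apiVersion: network.openshift.io/v1
kind: HostSubnet
metadata:
  name: oc311-node1.example.com
host: oc311-node1.example.com
hostIP: 10.x.x.x           # next hop (gateway) of the static route on the ADC
subnet: 10.130.0.0/23      # route destination; /23 corresponds to 255.255.254.0
```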

Auto Routes: use the CNC (Citrix Node Controller) to automate the external routes to the defined route shards.

You can integrate Citrix ADC with OpenShift in two ways, both of which support OpenShift router sharding.

Route Types

- unsecured: external load balancer to CIC router; HTTP traffic is not encrypted.

- secured-edge: external load balancer to CIC router, which terminates TLS.

- secured-passthrough: external load balancer to destination, which terminates TLS.

- secured-reencrypt: external load balancer to CIC router, which terminates TLS; the CIC router then encrypts traffic to the destination using TLS.
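As a sketch of how these route types map onto an OpenShift Route object, a secured-edge route could look like the following. The route, service, namespace, and host names are hypothetical; the comments describe how the tls stanza changes for the other route types.

```yaml
# Sketch: secured-edge route (all names are hypothetical).
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: example-route
  namespace: example
spec:
  host: www.example.com
  to:
    kind: Service
    name: example-service
  tls:
    # edge: TLS terminates at the router.
    # passthrough: TLS is passed through and terminates at the destination pod.
    # reencrypt: TLS terminates at the router, which re-encrypts to the pod.
    # Omit the tls stanza entirely for an unsecured route.
    termination: edge
    insecureEdgeTerminationPolicy: Redirect   # HTTP requests are redirected to HTTPS
```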

Deployment Example #1: CIC deployed as an OpenShift router plug-in

For routes, this example uses route sharding, with the CIC acting as the router that distributes incoming traffic among the available pods. The CIC is installed as a router plug-in for a Citrix ADC MPX or VPX deployed outside the cluster.

Citrix Components:

- VPX - The Ingress ADC that is presenting the cluster services to DNS.

- CIC - provides the ROUTE_LABELS and NAMESPACE_LABELS to the external Citrix ADC via the CNC route.

Sample YAML file parameters for route shards:

Citrix OpenShift source files are located in GitHub here.

Add the following environment variables, ROUTE_LABELS and NAMESPACE_LABELS, with values in the Kubernetes label format. NAMESPACE_LABELS is an optional route sharding expression in the CIC; if used, it must match the labels of the namespace in which the route is created.

```yaml
env:
  - name: "ROUTE_LABELS"
    value: "name=apache-web"
  - name: "NAMESPACE_LABELS"
    value: "app=hellogalaxy"
```

Routes created through route.yaml whose labels match the route sharding expressions in the CIC are configured on the Citrix ADC.

```yaml
metadata:
  name: apache-route
  namespace: hellogalaxy
  labels:
    name: apache-web
```

Expose the service with service.yaml.

```yaml
metadata:
  name: apache-service
spec:
  type: NodePort #type=LoadBalancer
  ports:
    - port: 80
      targetPort: 80
  selector:
    app: apache
```

Deploy a simple web application with a selector label matching that in the service.yaml.
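Putting the route sharding pieces above together, a minimal sketch of the namespace and the route might look like this. The namespace label app=hellogalaxy satisfies NAMESPACE_LABELS and the route label name=apache-web satisfies ROUTE_LABELS, so the CIC should pick up this route. The host name is hypothetical, and apache-service is assumed to be deployed in the hellogalaxy namespace.

```yaml
# Sketch: namespace and route that match the CIC route sharding expressions.
apiVersion: v1
kind: Namespace
metadata:
  name: hellogalaxy
  labels:
    app: hellogalaxy            # matches NAMESPACE_LABELS "app=hellogalaxy"
---
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: apache-route
  namespace: hellogalaxy
  labels:
    name: apache-web            # matches ROUTE_LABELS "name=apache-web"
spec:
  host: apache.example.com      # hypothetical host name
  to:
    kind: Service
    name: apache-service
  port:
    targetPort: 80              # the service's target port
```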

Deployment Example #2: Citrix ADC CPX deployed as an OpenShift router

Citrix ADC CPX can be deployed as an OpenShift router, along with the Citrix Ingress Controller, inside the cluster. For detailed steps on deploying a CPX or CIC as a router in the cluster, see Enable OpenShift routing sharding support with Citrix ADC.

Citrix Components:

- VPX - The Ingress ADC that is presenting the cluster services to DNS.

- CIC - provides the ROUTE_LABELS and NAMESPACE_LABELS to the external Citrix ADC to define the route shards.

- CNC - provides the auto-route configuration for shards to the external load balancer.

- CPX - provides OpenShift routing within the OpenShift cluster.

Service Migration with Ingress Classes Annotation

This method uses the ingress class annotation concept: each Ingress is annotated with ingress class information, which helps redirect traffic from the external ADC to a particular pod or node.

Sample YAML file parameters for ingress classes:

Citrix Ingress source files are located in GitHub here.

```yaml
env:
  # ... (other environment variables)
args:
  - --ingress-classes vpx
```

The Ingress configuration file should also have a kubernetes.io/ingress.class annotation inside metadata; at creation time, this annotation is matched against the CIC --ingress-classes argument.

Sample Ingress VPX deployment with the “ingress.classes” example:

```yaml
kind: Ingress
metadata:
  name: ingress-vpx
  annotations:
    kubernetes.io/ingress.class: "vpx"
```
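A complete version of the fragment above might look like the following sketch. Only a CIC started with --ingress-classes vpx acts on this Ingress; a CIC watching a different class ignores it, which is what lets a service be moved between controllers by changing the annotation. The host and backend service names are hypothetical.

```yaml
# Sketch: complete Ingress for the "vpx" class (host and service are hypothetical).
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-vpx
  annotations:
    kubernetes.io/ingress.class: "vpx"   # must match the CIC --ingress-classes value
spec:
  rules:
    - host: www.example.com
      http:
        paths:
          - path: /
            backend:
              serviceName: example-service
              servicePort: 80
```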

Citrix Metrics Exporter

You can use the Citrix ADC metrics exporter and Prometheus-Operator to monitor Citrix ADC VPX or CPX ingress devices and Citrix ADC CPX (east-west) devices. See View metrics of Citrix ADCs using Prometheus and Grafana.
