Prepare host for graphics

This section provides step-by-step instructions on how to prepare XenServer for supported graphical virtualization technologies. The offerings include NVIDIA vGPU, Intel GVT-d, and Intel GVT-g.

NVIDIA vGPU

NVIDIA vGPU enables multiple virtual machines (VMs) to have simultaneous, direct access to a single physical GPU. It uses the same NVIDIA graphics drivers that are deployed on non-virtualized operating systems. NVIDIA physical GPUs can support multiple virtual GPU devices (vGPUs). To provide this support, the physical GPU must be under the control of the NVIDIA Virtual GPU Manager running in the XenServer Control Domain (dom0). The vGPUs can be assigned directly to VMs.

VMs use virtual GPUs in the same way as a physical GPU that the hypervisor has passed through. An NVIDIA driver loaded in the VM provides direct access to the GPU for performance-critical fast paths. It also provides a paravirtualized interface to the NVIDIA Virtual GPU Manager.

Important:

To always have the latest security and functional fixes, install the latest NVIDIA vGPU software package for XenServer (consisting of the NVIDIA Virtual GPU Manager for XenServer and NVIDIA drivers) and keep it updated to the latest version provided by NVIDIA. For more information, see the NVIDIA documentation.

The latest NVIDIA drivers are available from the NVIDIA Application Hub.

NVIDIA vGPU is compatible with the HDX 3D Pro feature of Citrix Virtual Apps and Desktops or Citrix DaaS. For more information, see HDX 3D Pro.

Licensing note

NVIDIA vGPU is available for XenServer Premium Edition customers. To learn more about XenServer editions, and to find out how to upgrade, visit the XenServer website. For more information, see Licensing.

Depending on the NVIDIA graphics card used, you might need an NVIDIA subscription or a license.

For information on licensing NVIDIA cards, see the NVIDIA website.

Available NVIDIA vGPU types

NVIDIA GRID cards contain multiple Graphics Processing Units (GPUs). For example, Tesla M10 cards contain four GM107GL GPUs, and Tesla M60 cards contain two GM204GL GPUs. Each physical GPU can host several different types of virtual GPU (vGPU). Each vGPU type has a fixed amount of frame buffer, a fixed number of supported display heads, and a fixed maximum resolution, and is targeted at a different class of workload.

For a list of the most recently supported NVIDIA cards, see the Hardware Compatibility List and the NVIDIA product information.

Note:

The vGPUs hosted on a physical GPU at the same time must all be of the same type. However, there is no corresponding restriction for physical GPUs on the same card. This restriction is automatic and can cause unexpected capacity planning issues.
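The capacity effect of this restriction can be sketched with a little arithmetic. The following is a minimal sketch, not an NVIDIA or XenServer tool: `pgpus_needed` is a hypothetical helper, and the type names and per-GPU limits in the usage comment are placeholders. Take real limits from the NVIDIA product information. Because a physical GPU hosts only one vGPU type at a time, the count is rounded up separately for each type.

```shell
# Estimate how many physical GPUs a mix of vGPU types needs.
# Input (stdin), one line per vGPU type:
#   <type-name> <vGPUs-wanted> <max-vGPUs-of-this-type-per-physical-GPU>
# All names and limits passed in are the caller's assumptions.
pgpus_needed() {
  awk '
    NF >= 3 && $3 > 0 {
      # One vGPU type per physical GPU, so round up per type.
      total += int(($2 + $3 - 1) / $3)
    }
    END { print total + 0 }
  '
}

# Usage (placeholder types and limits):
#   printf 'typeA 3 4\ntypeB 1 8\n' | pgpus_needed
```

In the usage line, the four VMs occupy two physical GPUs, because the single `typeB` vGPU cannot share a physical GPU with the `typeA` vGPUs.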

NVIDIA vGPU system requirements

-

NVIDIA GRID card:

- For a list of the most recently supported NVIDIA cards, see the Hardware Compatibility List and the NVIDIA product information.

-

Depending on the NVIDIA graphics card used, you might need an NVIDIA subscription or a license. For more information, see the NVIDIA product information.

-

Depending on the NVIDIA graphics card, you might need to ensure that the card is set to the correct mode. For more information, see the NVIDIA documentation.

-

XenServer Premium Edition.

-

A host capable of hosting XenServer and the supported NVIDIA cards.

-

NVIDIA vGPU software package for XenServer, consisting of the NVIDIA Virtual GPU Manager for XenServer, and NVIDIA drivers.

Note:

Review the NVIDIA Virtual GPU software documentation available from the NVIDIA website. Register with NVIDIA to access these components.

-

To run Citrix Virtual Desktops with VMs running NVIDIA vGPU, you also need: Citrix Virtual Desktops 7.6 or later, full installation.

-

For NVIDIA Ampere vGPUs and all future generations, you must enable SR-IOV in your system firmware.

vGPU live migration

XenServer enables the use of live migration, storage live migration, and the ability to suspend and resume for NVIDIA vGPU-enabled VMs.

To use the vGPU live migration, storage live migration, or Suspend features, satisfy the following requirements:

-

An NVIDIA GRID card, Maxwell family or later.

-

An NVIDIA Virtual GPU Manager for XenServer with live migration enabled. For more information, see the NVIDIA Documentation.

-

A Windows VM that has NVIDIA live migration-enabled vGPU drivers installed.

vGPU live migration enables the use of live migration within a pool, live migration between pools, storage live migration, and Suspend/Resume of vGPU-enabled VMs.

Preparation overview

-

Install XenServer

-

Install the NVIDIA Virtual GPU Manager for XenServer

-

Restart the XenServer host

Installation on XenServer

XenServer is available for download from the XenServer Downloads page.

Install the following:

-

XenServer Base Installation ISO

-

XenCenter Windows Management Console

For more information, see Install.

Licensing note

vGPU is available for XenServer Premium Edition customers. To learn more about XenServer editions, and to find out how to upgrade, visit the XenServer website. For more information, see Licensing.

Depending on the NVIDIA graphics card used, you might need an NVIDIA subscription or a license. For more information, see NVIDIA product information.

For information about licensing NVIDIA cards, see the NVIDIA website.

Install the NVIDIA vGPU Manager for XenServer

Install the NVIDIA Virtual GPU software that is available from NVIDIA. The NVIDIA Virtual GPU software consists of:

-

NVIDIA Virtual GPU Manager

-

Windows Display Driver (The Windows display driver depends on the Windows version)

The NVIDIA Virtual GPU Manager runs in the XenServer Control Domain (dom0). It is provided as either a supplemental pack or an RPM file. For more information about installation, see the NVIDIA Virtual GPU software documentation.

You can install the update by using one of the following methods:

- Use XenCenter (Tools > Install Update > Select update or supplemental pack from disk)

- Use the xe CLI command xe-install-supplemental-pack.
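As a rough illustration of the two command-line routes, the sketch below prints the dom0 command that matches a package file. It assumes that a supplemental pack arrives as an `.iso` file and an RPM package as an `.rpm` file. The function name `vgpu_manager_install_command` and the file names in the usage comment are hypothetical. The function only prints a command and does not install anything.

```shell
# Print the dom0 command that would install a vGPU Manager package.
# Assumption: supplemental packs are .iso files, RPM packages are .rpm files.
vgpu_manager_install_command() {
  pkg=$1
  case "$pkg" in
    *.iso) printf 'xe-install-supplemental-pack %s\n' "$pkg" ;;
    *.rpm) printf 'rpm -iv %s\n' "$pkg" ;;
    *)     printf 'unsupported package type: %s\n' "$pkg" >&2
           return 1 ;;
  esac
}

# Usage (hypothetical file names):
#   vgpu_manager_install_command /root/vgpu-manager.iso
#   vgpu_manager_install_command /root/vgpu-manager.rpm
```

Printing the command rather than running it lets you review it before executing it in dom0.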

Note:

If you are installing the NVIDIA Virtual GPU Manager using an RPM file, ensure that you copy the RPM file to dom0 and then install.

-

Use the rpm command to install the package:

    rpm -iv <vgpu_manager_rpm_filename>

-

Restart the XenServer host:

    shutdown -r now

-

After you restart the XenServer host, verify that the software has been installed and loaded correctly by checking the NVIDIA kernel driver:

    [root@xenserver ~]# lsmod | grep nvidia
    nvidia               8152994  0

-

Verify that the NVIDIA kernel driver can successfully communicate with the NVIDIA physical GPUs in your host. Run the nvidia-smi command to produce a listing of the GPUs in your platform similar to:

    [root@xenserver ~]# nvidia-smi

    Thu Jan 26 13:48:50 2017
    +----------------------------------------------------------+
    | NVIDIA-SMI 367.64     Driver Version: 367.64             |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |===============================+======================+======================|
    |   0  Tesla M60           On   | 0000:05:00.0     Off |                  Off |
    | N/A   33C    P8    24W / 150W |   7249MiB /  8191MiB |      0%      Default |
    +-------------------------------+----------------------+----------------------+
    |   1  Tesla M60           On   | 0000:09:00.0     Off |                  Off |
    | N/A   36C    P8    24W / 150W |   7249MiB /  8191MiB |      0%      Default |
    +-------------------------------+----------------------+----------------------+
    |   2  Tesla M60           On   | 0000:85:00.0     Off |                  Off |
    | N/A   36C    P8    23W / 150W |     19MiB /  8191MiB |      0%      Default |
    +-------------------------------+----------------------+----------------------+
    |   3  Tesla M60           On   | 0000:89:00.0     Off |                  Off |
    | N/A   37C    P8    23W / 150W |     14MiB /  8191MiB |      0%      Default |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                       GPU Memory |
    |  GPU       PID  Type  Process name                               Usage      |
    |=============================================================================|
    |  No running compute processes found                                         |
    +-----------------------------------------------------------------------------+

Note:

When using NVIDIA vGPU with XenServer hosts that have more than 768 GB of RAM, add the parameter iommu=dom0-passthrough to the Xen command line:

-

Run the following command in the control domain (dom0):

    /opt/xensource/libexec/xen-cmdline --set-xen iommu=dom0-passthrough

-

Restart the host.
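The post-installation checks above can be scripted. The following is a minimal sketch under stated assumptions, not part of the NVIDIA or XenServer tooling: both function names are hypothetical, and the 768 GB threshold is taken here as 768 × 1024³ bytes. The first helper reads `lsmod` output on standard input and matches a module named exactly `nvidia`, which is stricter than `grep nvidia` because it ignores modules that only contain the string. The second helper takes the host's total RAM in bytes, obtained however you prefer.

```shell
# Succeed if lsmod output on stdin lists a module named exactly "nvidia".
nvidia_module_loaded() {
  awk '$1 == "nvidia" { found = 1 } END { exit !found }'
}

# Succeed if the given RAM size (bytes) is above 768 GB.
# Assumption: 768 GB is interpreted as 768 * 1024^3 bytes.
needs_dom0_passthrough() {
  awk -v bytes="$1" 'BEGIN { exit !(bytes + 0 > 768 * 1024 * 1024 * 1024) }'
}

# Usage in dom0:
#   lsmod | nvidia_module_loaded && echo "NVIDIA kernel module is loaded"
#   needs_dom0_passthrough "$total_ram_bytes" && echo "set iommu=dom0-passthrough"
```

Neither helper changes the host. Run the xen-cmdline command and the restart yourself after reviewing the result.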


Intel GVT-d and GVT-g

XenServer supports Intel’s virtual GPU (GVT-g), a graphics acceleration solution that requires no additional hardware. It uses the Intel Iris Pro feature embedded in certain Intel processors, and a standard Intel GPU driver installed within the VM.

To always have the latest security and functional fixes, install any updates provided by Intel for the drivers on your VMs and the firmware on your host.

Intel GVT-d and GVT-g are compatible with the HDX 3D Pro features of Citrix Virtual Apps and Desktops or Citrix DaaS. For more information, see HDX 3D Pro.

Note:

Because the Intel Iris Pro graphics feature is embedded within the processors, CPU-intensive applications can cause power to be diverted from the GPU. As a result, you might not experience full graphics acceleration as you do for purely GPU-intensive workloads.

Intel GVT-g system requirements and configuration (deprecated)

To use Intel GVT-g, your XenServer host must have the following hardware:

- A CPU that has Iris Pro graphics. This CPU must be listed as supported for Graphics on the Hardware Compatibility List.

- A motherboard that has a graphics-enabled chipset. For example, C226 for Xeon E3 v4 CPUs or C236 for Xeon E3 v5 CPUs.

Note:

Ensure that you restart the hosts when switching between Intel GPU pass-through (GVT-d) and Intel Virtual GPU (GVT-g).

When configuring Intel GVT-g, the number of Intel virtual GPUs supported on a specific XenServer host depends on its GPU BAR size. The GPU BAR size is called the ‘Aperture size’ in the system firmware. We recommend that you set the Aperture size to 1,024 MB to support a maximum of seven virtual GPUs per host.

If you configure the Aperture size to 256 MB, only one VM can start on the host. Setting it to 512 MB can result in only three VMs being started on the XenServer host. An Aperture size higher than 1,024 MB is not supported and does not increase the number of VMs that start on a host.
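The Aperture size figures above can be captured in a small lookup for planning scripts. This is a minimal sketch: `gvtg_max_vms` is a hypothetical helper name, and it encodes only the three sizes this section documents. Any other value is rejected rather than guessed.

```shell
# Map a firmware Aperture size (in MB) to the number of GVT-g VMs that
# can start on the host, using only the values documented in this section.
gvtg_max_vms() {
  case "$1" in
    256)  echo 1 ;;
    512)  echo 3 ;;
    1024) echo 7 ;;
    *)    echo "unsupported or undocumented Aperture size: $1" >&2
          return 1 ;;
  esac
}

# Usage:
#   gvtg_max_vms 1024
```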

Enable Intel GPU pass-through

XenServer supports the GPU pass-through feature for Windows VMs using an Intel integrated GPU device.

- For more information on Windows versions supported with Intel GPU pass-through, see Graphics.

- For more information on supported hardware, see the Hardware Compatibility List.

When using Intel GPU on Intel servers, the XenServer server’s Control Domain (dom0) has access to the integrated GPU device. In such cases, the GPU is available for pass-through. To use the Intel GPU Pass-through feature on Intel servers, disable the connection between dom0 and the GPU before passing through the GPU to the VM.

To disable this connection, complete the following steps:

-

On the Resources pane, choose the XenServer host.

-

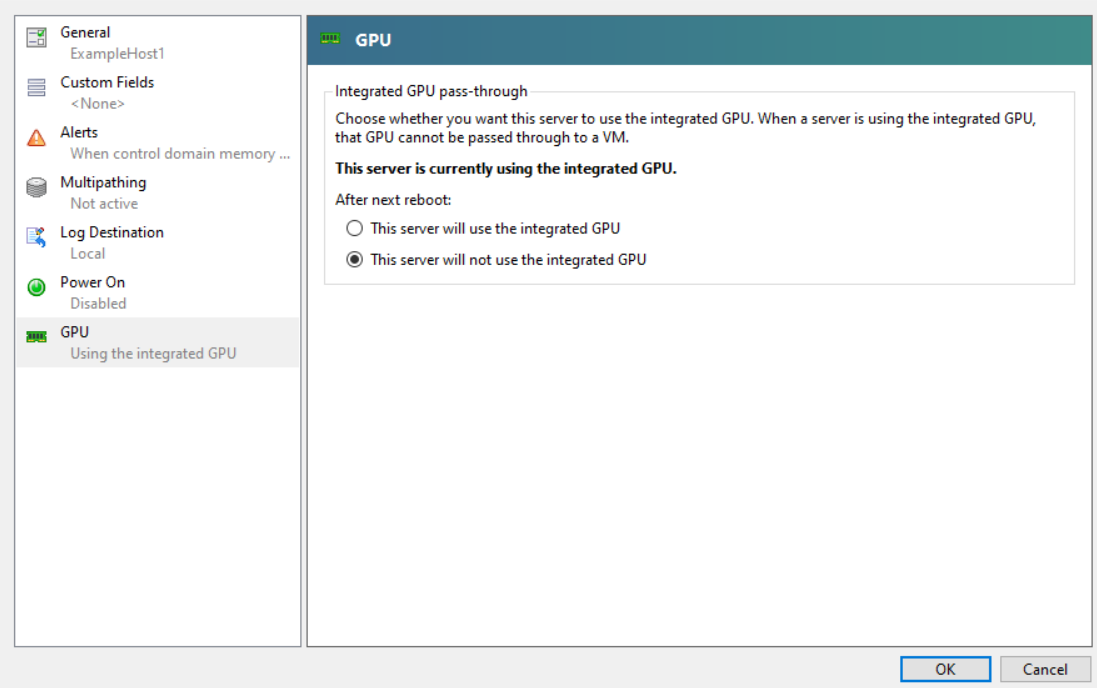

On the General tab, click Properties, and in the left pane, click GPU.

-

In the Integrated GPU passthrough section, select This server will not use the integrated GPU.

This step disables the connection between dom0 and the Intel integrated GPU device.

-

Click OK.

-

Restart the XenServer host for the changes to take effect.

The Intel GPU is now visible on the GPU type list during new VM creation, and on the VM’s Properties tab.

Note:

The XenServer host’s external console output (for example, VGA, HDMI, DP) is not available after you disable the connection between dom0 and the GPU.