Deployment Guide Citrix ADC VPX on Azure - Disaster Recovery

Contributors

Author: Blake Schindler, Solutions Architect

Overview

Citrix ADC is an application delivery and load balancing solution that provides a high-quality user experience for web, traditional, and cloud-native applications regardless of where they are hosted. It comes in a wide variety of form factors and deployment options without locking users into a single configuration or cloud. Pooled capacity licensing enables the movement of capacity among cloud deployments.

As an undisputed leader of service and application delivery, Citrix ADC is deployed in thousands of networks around the world to optimize, secure, and control the delivery of all enterprise and cloud services. Deployed directly in front of web and database servers, Citrix ADC combines high-speed load balancing and content switching, HTTP compression, content caching, SSL acceleration, application flow visibility, and a powerful application firewall into an integrated, easy-to-use platform. Meeting SLAs is greatly simplified with end-to-end monitoring that transforms network data into actionable business intelligence. Citrix ADC allows policies to be defined and managed using a simple declarative policy engine with no programming expertise required.

Citrix VPX

The Citrix ADC VPX product is a virtual appliance that can be hosted on a wide variety of virtualization and cloud platforms:

- Citrix Hypervisor™
- VMware ESX
- Microsoft Hyper-V
- Linux KVM
- Amazon Web Services
- Microsoft Azure
- Google Cloud Platform

This deployment guide focuses on Citrix ADC VPX on Microsoft Azure.

Microsoft Azure

Microsoft Azure is an ever-expanding set of cloud computing services built to help organizations meet their business challenges. Azure gives users the freedom to build, manage, and deploy applications on a massive, global network using their preferred tools and frameworks. With Azure, users can:

- Be future-ready with continuous innovation from Microsoft to support their development today and their product visions for tomorrow.

- Operate hybrid cloud seamlessly on-premises, in the cloud, and at the edge. Azure meets users where they are.

- Build on their terms with Azure’s commitment to open source and support for all languages and frameworks, allowing users to be free to build how they want and deploy where they want.

- Trust their cloud with security from the ground up, backed by a team of experts and proactive, industry-leading compliance that is trusted by enterprises, governments, and startups.

Azure Terminology

Here is a brief description of the essential terms used in this document that users must be familiar with:

- Azure Load Balancer – A resource that distributes incoming traffic among computers in a network. Traffic is distributed among virtual machines defined in a load-balancer set. A load balancer can be external (internet-facing) or internal.

- Azure Resource Manager (ARM) – ARM is the new management framework for services in Azure. Azure Load Balancer is managed using ARM-based APIs and tools.

- Back-End Address Pool – The IP addresses associated with the virtual machine network interface card (NIC) to which the load is distributed.

- BLOB (Binary Large Object) – Any binary object, such as a file or an image, that can be stored in Azure storage.

- Front-End IP Configuration – An Azure Load Balancer can include one or more front-end IP addresses, also known as virtual IPs (VIPs). These IP addresses serve as ingress for the traffic.

- Instance Level Public IP (ILPIP) – An ILPIP is a public IP address that users can assign directly to a virtual machine or role instance, rather than to the cloud service that the virtual machine or role instance resides in. The ILPIP does not take the place of the VIP (virtual IP) that is assigned to the cloud service. Rather, it is an extra IP address that can be used to connect directly to a virtual machine or role instance.

  Note: In the past, an ILPIP was referred to as a PIP, which stands for public IP.

- Inbound NAT Rules – Rules that map a public port on the load balancer to a port for a specific virtual machine in the back-end address pool.

- IP-Config – An IP address pair (public IP and private IP) associated with an individual NIC. In an IP-Config, the public IP address can be NULL. Each NIC can have up to 255 IP-Configs associated with it.

- Load Balancing Rules – A rule property that maps a given front-end IP and port combination to a set of back-end IP addresses and port combinations. With a single definition of a load balancer resource, users can define multiple load balancing rules, each rule reflecting a combination of a front-end IP and port and a back-end IP and port associated with virtual machines.

- Network Security Group (NSG) – An NSG contains a list of Access Control List (ACL) rules that allow or deny network traffic to virtual machine instances in a virtual network. NSGs can be associated with either subnets or individual virtual machine instances within that subnet. When an NSG is associated with a subnet, the ACL rules apply to all the virtual machine instances in that subnet. In addition, traffic to an individual virtual machine can be restricted further by associating an NSG directly with that virtual machine.

- Private IP addresses – Used for communication within an Azure virtual network, and with the user's on-premises network when a VPN gateway is used to extend that network to Azure. Private IP addresses allow Azure resources to communicate with other resources in a virtual network or an on-premises network through a VPN gateway or ExpressRoute circuit, without using an internet-reachable IP address. In the Azure Resource Manager deployment model, a private IP address is associated with the following types of Azure resources: virtual machines, internal load balancers (ILBs), and application gateways.

- Probes – Health probes used to check the availability of virtual machine instances in the back-end address pool. If a virtual machine does not respond to health probes for some time, it is automatically taken out of rotation and stops serving traffic. Probes enable users to track the health of virtual instances.

- Public IP Addresses (PIP) – A PIP is used for communication with the Internet, including Azure public-facing services, and is associated with virtual machines, internet-facing load balancers, VPN gateways, and application gateways.

- Region – An area within a geography that does not cross national borders and that contains one or more data centers. Pricing, regional services, and offer types are exposed at the region level. A region is typically paired with another region, which can be up to several hundred miles away, to form a regional pair. Regional pairs can be used as a mechanism for disaster recovery and high availability scenarios. Also referred to generally as location.

- Resource Group – A container in Resource Manager that holds related resources for an application. The resource group can include all of the resources for an application, or only those resources that are logically grouped.

- Storage Account – An Azure storage account gives users access to the Azure blob, queue, table, and file services in Azure Storage. The storage account provides a unique namespace for the user's Azure storage data objects.

- Virtual Machine – The software implementation of a physical computer that runs an operating system. Multiple virtual machines can run simultaneously on the same hardware. In Azure, virtual machines are available in various sizes.

- Virtual Network – An Azure virtual network is a representation of a user network in the cloud. It is a logical isolation of the Azure cloud dedicated to a user subscription. Users can fully control the IP address blocks, DNS settings, security policies, and route tables within this network. Users can also further segment their VNet into subnets and launch Azure IaaS virtual machines and cloud services (PaaS role instances). Also, users can connect the virtual network to their on-premises network using one of the connectivity options available in Azure. In essence, users can expand their network to Azure, with complete control over IP address blocks and the benefit of the enterprise scale that Azure provides.

Use Cases

Compared to alternative solutions that require each service to be deployed as a separate virtual appliance, Citrix ADC on Azure combines L4 load balancing, L7 traffic management, server offload, application acceleration, application security, and other essential application delivery capabilities in a single VPX instance, conveniently available via the Azure Marketplace. Furthermore, everything is governed by a single policy framework and managed with the same, powerful set of tools used to administer on-premises Citrix ADC deployments. The net result is that Citrix ADC on Azure enables several compelling use cases that not only support the immediate needs of today’s enterprises, but also the ongoing evolution from legacy computing infrastructures to enterprise cloud data centers.

Disaster Recovery (DR)

A disaster is a sudden disruption of business functions caused by natural calamities or human-caused events. Disasters affect data center operations, after which resources and the data lost at the disaster site must be fully rebuilt and restored. Data loss or downtime in the data center is critical and breaks business continuity.

One of the challenges that customers face today is deciding where to put their DR site. Businesses are looking for consistency and performance regardless of any underlying infrastructure or network faults.

Possible reasons many organizations are deciding to migrate to the cloud are:

- Usage economics – The capital expense of running an on-premises data center is well documented. By using the cloud, businesses can free up the time and resources they would otherwise spend expanding their own systems.

- Faster recovery times – Much of the automated orchestration enables recovery in mere minutes.

- Technologies are available that help replicate data by providing continuous data protection or continuous snapshots to guard against any outage or attack.

- Some customers need many different types of compliance and security controls that are already present on the public clouds. Using them makes it easier to achieve the required compliance than building those controls in-house.

A Citrix ADC configured for global server load balancing (GSLB) forwards traffic to the least-loaded or best-performing data center. This configuration, referred to as an active-active setup, not only improves performance, but also provides immediate disaster recovery by routing traffic to other data centers if a data center that is part of the setup goes down. Citrix ADC thereby saves customers valuable time and money.
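The selection behavior described above can be illustrated with a small sketch. This is not the Citrix GSLB algorithm; it is a simplified model that assumes each site reports a health flag and an active connection count, and all site names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Site:
    name: str                 # hypothetical data center label
    healthy: bool             # result of the most recent health check
    active_connections: int   # load metric used for the comparison


def pick_site(sites: List[Site]) -> Optional[Site]:
    """Return the healthy site with the fewest active connections, or None."""
    candidates = [s for s in sites if s.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda s: s.active_connections)


sites = [
    Site("on-prem-dc", healthy=True, active_connections=120),
    Site("azure-dr", healthy=True, active_connections=80),
]
print(pick_site(sites).name)   # azure-dr: fewest connections

sites[1].healthy = False       # simulate the Azure site going down
print(pick_site(sites).name)   # on-prem-dc: the only healthy site left
```

In an active-active setup both sites serve traffic in normal operation, and the same selection step doubles as the disaster recovery path once a site is marked unhealthy.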

Deployment Types

Multi-NIC Multi-IP Deployment (Three-NIC Deployment)

- Typical Deployments
  - High Availability (HA)
  - Standalone
- Use Cases
  - Multi-NIC Multi-IP Deployments are used to achieve real isolation of data and management traffic.
  - Multi-NIC Multi-IP Deployments also improve the scale and performance of the ADC.
  - Multi-NIC Multi-IP Deployments are used in network applications where throughput is typically 1 Gbps or higher and a Three-NIC Deployment is recommended.

Single-NIC Multi-IP Deployment (One-NIC Deployment)

- Typical Deployments
  - High Availability (HA)
  - Standalone
- Use Cases
  - Internal Load Balancing
  - A typical use case of the Single-NIC Multi-IP Deployment is intranet applications requiring lower throughput (less than 1 Gbps).

Citrix ADC Azure Resource Manager Templates

Azure Resource Manager (ARM) Templates provide a method of deploying ADC infrastructure-as-code to Azure simply and consistently. Azure is managed using an Azure Resource Manager (ARM) API. The resources the ARM API manages are objects in Azure such as network cards, virtual machines, and hosted databases. ARM Templates declare the objects users want, along with their types, names, and properties, in a JSON file that the ARM API understands and that can be checked into source control and managed like any other code file. This is what gives users the ability to roll out Azure infrastructure as code.

- Use Cases
  - Customizing deployment
  - Automating deployment
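To make the idea of a JSON declaration concrete, the sketch below builds a minimal ARM template in Python and prints it as JSON. It is not one of the Citrix templates: the single resource (a static public IP), the parameter name `pipName`, its default value, and the API version are illustrative assumptions and should be checked against current Azure documentation before use.

```python
import json

# Minimal ARM template skeleton. The resource, the "pipName" parameter, and
# the apiVersion are assumptions for illustration, not Citrix template content.
template = {
    "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
    "contentVersion": "1.0.0.0",
    "parameters": {
        "pipName": {"type": "string", "defaultValue": "vpx-pip"},
    },
    "resources": [
        {
            "type": "Microsoft.Network/publicIPAddresses",
            "apiVersion": "2020-11-01",  # assumed version; verify before use
            "name": "[parameters('pipName')]",
            "location": "[resourceGroup().location]",
            "properties": {"publicIPAllocationMethod": "Static"},
        }
    ],
}

# The JSON text is what would be saved to a file and kept in source control.
print(json.dumps(template, indent=2))
```

Each entry under `resources` carries the type, name, and properties mentioned above, which is why a template can be reviewed and versioned like any other code file.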

Multi-NIC Multi-IP (Three-NIC) Deployment for DR

Customers would potentially deploy using three-NIC deployment if they are deploying into a production environment where security, redundancy, availability, capacity, and scalability are critical. With this deployment method, complexity and ease of management are not critical concerns to the users.

Single NIC Multi IP (One-NIC) Deployment for DR

Customers would potentially deploy using one-NIC deployment if they are deploying into a non-production environment, they are setting up the environment for testing, or they are staging a new environment before production deployment. Another potential reason for using one-NIC deployment is that customers want to deploy direct to the cloud quickly and efficiently. Finally, one-NIC deployment would be used when customers seek the simplicity of a single subnet configuration.

Azure Resource Manager Template Deployment

Customers would deploy using Azure Resource Manager (ARM) Templates if they are customizing their deployments or they are automating their deployments.

Network Architecture

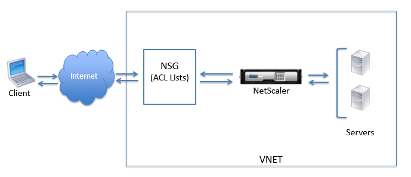

In ARM, a Citrix ADC VPX virtual machine (VM) resides in a virtual network. A virtual network interface card (NIC) is created on each Citrix ADC VM. The network security group (NSG) configured in the virtual network is bound to the NIC, and together they control the traffic flowing into the VM and out of the VM.

The NSG forwards the requests to the Citrix ADC VPX instance, and the VPX instance sends them to the servers. The response from a server follows the same path in reverse. The NSG can be configured to control a single VPX VM, or, with subnets and virtual networks, can control traffic in multiple VPX VM deployments.

The NIC contains network configuration details such as the virtual network, subnets, internal IP address, and Public IP address.

In ARM, the following IP addresses are used to access VMs deployed with a single NIC and a single IP address:

- Public IP (PIP) address is the internet-facing IP address configured directly on the virtual NIC of the Citrix ADC VM. This allows users to directly access a VM from the external network.

- Citrix ADC IP (NSIP) address is an internal IP address configured on the VM. It is non-routable.

- Virtual IP address (VIP) is configured by using the NSIP and a port number. Clients access Citrix ADC services through the PIP address, and when the request reaches the NIC of the Citrix ADC VPX VM or the Azure load balancer, the VIP gets translated to the internal IP (NSIP) and internal port number.

- Internal IP address is the private internal IP address of the VM from the virtual network’s address space pool. This IP address cannot be reached from the external network. This IP address is dynamic by default unless users set it to static. Traffic from the internet is routed to this address according to the rules created on the NSG. The NSG works with the NIC to selectively send the right type of traffic to the right port on the NIC, which depends on the services configured on the VM.

The following figure shows how traffic flows from a client to a server through a Citrix ADC VPX instance provisioned in ARM.

Deployment Steps

When users deploy a Citrix ADC VPX instance on Microsoft Azure Resource Manager (ARM), they can use the Azure cloud computing capabilities and use Citrix ADC load balancing and traffic management features for their business needs. Users can deploy Citrix ADC VPX instances on Azure Resource Manager either as standalone instances or as high availability pairs in active-standby modes.

Users can deploy a Citrix ADC VPX instance on Microsoft Azure in either of two ways:

- Through the Azure Marketplace. The Citrix ADC VPX virtual appliance is available as an image in the Microsoft Azure Marketplace.

- Using the Citrix ADC Azure Resource Manager (ARM) JSON template available on GitHub. For more information, see: Citrix ADC Azure Templates.

How a Citrix ADC VPX Instance Works on Azure

In an on-premises deployment, a Citrix ADC VPX instance requires at least three IP addresses:

- Management IP address, called NSIP address
- Subnet IP (SNIP) address for communicating with the server farm
- Virtual server IP (VIP) address for accepting client requests

For more information, see: Network Architecture for Citrix ADC VPX Instances on Microsoft Azure.

Note:

VPX virtual appliances can be deployed on any instance type that has two or more cores and more than 2 GB memory.

In an Azure deployment, users can provision a Citrix ADC VPX instance on Azure in three ways:

- Multi-NIC multi-IP architecture
- Single NIC multi IP architecture
- ARM (Azure Resource Manager) templates

Depending on requirements, users can deploy any of these supported architecture types.

Multi-NIC Multi-IP Architecture (Three-NIC)

In this deployment type, users can have more than one network interface (NIC) attached to a VPX instance. Any NIC can have one or more IP configurations (static or dynamic public and private IP addresses) assigned to it.

Refer to the following use cases:

- Configure a High-Availability Setup with Multiple IP Addresses and NICs
- Configure a High-Availability Setup with Multiple IP Addresses and NICs by using PowerShell Commands

Configure a High-Availability Setup with Multiple IP Addresses and NICs

In a Microsoft Azure deployment, a high-availability configuration of two Citrix ADC VPX instances is achieved by using the Azure Load Balancer (ALB). The ALB is configured with a health probe that monitors each VPX instance by sending health probes every 5 seconds to both the primary and secondary instances.

In this setup, only the primary node responds to health probes and the secondary does not. Once the primary sends the response to the health probe, the ALB starts sending the data traffic to the instance. If the primary instance misses two consecutive health probes, ALB does not redirect traffic to that instance. On failover, the new primary starts responding to health probes and the ALB redirects traffic to it. The standard VPX high availability failover time is three seconds. The total failover time that might occur for traffic switching can be a maximum of 13 seconds.
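The 13-second figure follows from the numbers already given: two missed probes at a 5-second interval, plus the 3-second VPX failover. The sketch below spells out that arithmetic; the function and parameter names are illustrative only.

```python
def max_failover_seconds(probe_interval_s: int, missed_probes: int,
                         ha_failover_s: int) -> int:
    """Worst-case time before the ALB sends traffic to the new primary."""
    # Time for the ALB to notice the old primary has stopped answering probes.
    detection_s = probe_interval_s * missed_probes
    # Add the time the VPX pair itself needs to fail over.
    return detection_s + ha_failover_s


print(max_failover_seconds(probe_interval_s=5, missed_probes=2, ha_failover_s=3))  # 13
```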

Users can deploy a pair of Citrix ADC VPX instances with multiple NICs in an active-passive high availability (HA) setup on Azure. Each NIC can contain multiple IP addresses.

The following options are available for a multi-NIC high availability deployment:

- High availability using Azure availability set
- High availability using Azure availability zones

For more information about Azure Availability Set and Availability Zones, see the Azure documentation: Manage the Availability of Linux Virtual Machines.

High Availability using Availability Set

A high availability setup using availability set must meet the following requirements:

- An HA Independent Network Configuration (INC) setup
- The Azure Load Balancer (ALB) in Direct Server Return (DSR) mode

All traffic goes through the primary node. The secondary node remains in standby mode until the primary node fails.

Note:

For a Citrix VPX high availability deployment on Azure cloud to work, users need a floating public IP (PIP) that can be moved between the two VPX nodes. The Azure Load Balancer (ALB) provides that floating PIP, which is moved to the second node automatically in the event of a failover.

In an active-passive deployment, the ALB front end public IP (PIP) addresses are added as the VIP addresses in each VPX node. In an HA-INC configuration, the VIP addresses are floating and the SNIP addresses are instance specific.

Users can deploy a VPX pair in active-passive high availability mode in two ways:

- Citrix ADC VPX standard high availability template: use this option to configure an HA pair with the default option of three subnets and six NICs.

- Windows PowerShell commands: use this option to configure an HA pair according to your subnet and NIC requirements.

This section describes how to deploy a VPX pair in active-passive HA setup by using the Citrix template. If users want to deploy with PowerShell commands, see: Configure a High-Availability Setup with Multiple IP Addresses and NICs by using PowerShell Commands.

Configure HA-INC Nodes by using the Citrix High Availability Template

Users can quickly and efficiently deploy a pair of VPX instances in HA-INC mode by using the standard template. The template creates two nodes, with three subnets and six NICs. The subnets are for management, client, and server-side traffic, and each subnet has two NICs for both of the VPX instances.
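The six-NIC count is simply two nodes times three subnets. As a rough illustration, the sketch below enumerates that layout. The node names match those shown in the Azure portal after deployment (ns-vpx0 and ns-vpx1), while the `nic-...` naming scheme is hypothetical and not what the template produces.

```python
from itertools import product

nodes = ["ns-vpx0", "ns-vpx1"]                  # the two HA nodes
subnets = ["management", "client", "server"]    # one subnet per traffic type

# One NIC per (node, subnet) pair; the "nic-..." names are hypothetical.
nics = [f"nic-{node}-{subnet}" for node, subnet in product(nodes, subnets)]

for nic in nics:
    print(nic)
print(len(nics))  # 6
```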

Users can get the Citrix ADC 12.1 HA Pair template at the Azure Marketplace by visiting: Azure Marketplace/Citrix ADC 12.1 (High Availability).

Complete the following steps to launch the template and deploy a high availability VPX pair, by using Azure Availability Sets.

- From Azure Marketplace, select and initiate the Citrix solution template. The template appears.

- Ensure deployment type is Resource Manager and select Create.

- The Basics page appears. Create a Resource Group and select OK.

- The General Settings page appears. Type the details and select OK.

- The Network Setting page appears. Check the VNet and subnet configurations, edit the required settings, and select OK.

- The Summary page appears. Review the configuration and edit accordingly. Select OK to confirm.

- The Buy page appears. Select Purchase to complete the deployment.

It might take a moment for the Azure Resource Group to be created with the required configurations. After completion, select the Resource Group in the Azure portal to see the configuration details, such as LB rules, back-end pools, health probes, and so on. The high availability pair appears as ns-vpx0 and ns-vpx1.

If further modifications are required for the HA setup, such as creating more security rules and ports, users can do that from the Azure portal.

Next, users need to configure the load-balancing virtual server with the ALB’s Frontend public IP (PIP) address, on the primary node. To find the ALB PIP, select ALB > Frontend IP configuration.

See the Resources section for more information about how to configure the load-balancing virtual server.

Resources:

The following links provide additional information related to HA deployment and virtual server configuration:

- Configure a High-Availability Setup with Multiple IP Addresses and NICs by using PowerShell Commands

High Availability using Availability Zones

Azure Availability Zones are fault-isolated locations within an Azure region, providing redundant power, cooling, and networking and increasing resiliency. Only specific Azure regions support Availability Zones. For more information, see the Azure documentation: Regions and Availability Zones in Azure.

Users can deploy a VPX pair in high availability mode by using the template called “NetScaler 13.0 HA using Availability Zones,” available in Azure Marketplace.

Complete the following steps to launch the template and deploy a high availability VPX pair, by using Azure Availability Zones.

- From Azure Marketplace, select and initiate the Citrix solution template.

- Ensure deployment type is Resource Manager and select Create.

- The Basics page appears. Enter the details and click OK.

Note:

Ensure that an Azure region that supports Availability Zones is selected. For more information about regions that support Availability Zones, see Azure documentation: Regions and Availability Zones in Azure.

- The General Settings page appears. Type the details and select OK.

- The Network Setting page appears. Check the VNet and subnet configurations, edit the required settings, and select OK.

- The Summary page appears. Review the configuration and edit accordingly. Select OK to confirm.

- The Buy page appears. Select Purchase to complete the deployment.

It might take a moment for the Azure Resource Group to be created with the required configurations. After completion, select the Resource Group to see the configuration details, such as LB rules, back-end pools, health probes, and so on, in the Azure portal. The high availability pair appears as ns-vpx0 and ns-vpx1. Also, users can see the location under the Location column.

If further modifications are required for the HA setup, such as creating more security rules and ports, users can do that from the Azure portal.

Single NIC Multi IP Architecture (One-NIC)

In this deployment type, one network interface (NIC) is associated with multiple IP configurations - static or dynamic public and private IP addresses assigned to it. For more information, refer to the following use cases:

Configure Multiple IP Addresses for a Citrix ADC VPX Standalone Instance

This section explains how to configure a standalone Citrix ADC VPX instance with multiple IP addresses, in Azure Resource Manager (ARM). The VPX instance can have one or more NICs attached to it, and each NIC can have one or more static or dynamic public and private IP addresses assigned to it. Users can assign multiple IP addresses as NSIP, VIP, SNIP, and so on.

For more information, refer to the Azure documentation: Assign Multiple IP Addresses to Virtual Machines using the Azure Portal.

If you want to deploy using PowerShell commands, see Configure Multiple IP Addresses for a Citrix ADC VPX Standalone Instance by using PowerShell Commands.

Standalone Citrix ADC VPX with Single NIC Use Case

In this use case, a standalone Citrix ADC VPX appliance is configured with a single NIC that is connected to a virtual network (VNET). The NIC is associated with three IP configurations (ipconfig), each serving a different purpose, as shown in the table:

| IPconfig | Associated with | Purpose |
|---|---|---|
| ipconfig1 | Static IP address; static private IP address | Serves management traffic |
| ipconfig2 | Static public IP address; static private IP address | Serves client-side traffic |
| ipconfig3 | Static private IP address | Communicates with back-end servers |

Note:

IPConfig-3 is not associated with any public IP address.

In a multi-NIC, multi-IP Azure Citrix ADC VPX deployment, the private IP associated with the primary (first) IPConfig of the primary (first) NIC is automatically added as the management NSIP of the appliance. The remaining private IP addresses associated with the IPConfigs need to be added in the VPX instance as a VIP or SNIP by using the add ns ip command, according to user requirements.

Before starting Deployment

Before users begin deployment, they must create a VPX instance using the steps that follow.

For this use case, the NSDoc0330VM VPX instance is created.

Configure Multiple IP Addresses for a Citrix ADC VPX Instance in Standalone Mode

- Add IP addresses to the VM
- Configure Citrix ADC-owned IP addresses

Step 1: Add IP Addresses to the VM

- In the portal, click More services, type virtual machines in the filter box, and then click Virtual machines.

- In the Virtual machines blade, click the VM you want to add IP addresses to. Click Network interfaces in the virtual machine blade that appears, and then select the network interface.

In the blade that appears for the NIC selected, click IP configurations. The existing IP configuration that was assigned when the VM was created, ipconfig1, is displayed. For this use case, make sure the IP addresses associated with ipconfig1 are static. Next, create two more IP configurations: ipconfig2 (VIP) and ipconfig3 (SNIP).

To create more IP configurations, click Add.

In the Add IP configuration window, enter a Name, specify the allocation method as Static, enter an IP address (192.0.0.5 for this use case), and enable Public IP address.

Note:

Before you add a static private IP address, check for IP address availability and make sure the IP address belongs to the same subnet to which the NIC is attached.

Next, click Configure required settings to create a static public IP address for ipconfig2.

By default, public IPs are dynamic. To make sure that the VM always uses the same public IP address, create a static Public IP.

In the Create public IP address blade, add a Name and, under Assignment, click Static. Then click OK.

Note:

Even when users set the allocation method to static, they cannot specify the actual IP address assigned to the public IP resource. Instead, it gets allocated from a pool of available IP addresses in the Azure location where the resource is created.

Follow the steps to add one more IP configuration for ipconfig3. Public IP is not mandatory.
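The note earlier in this step asks users to confirm that a static private IP address belongs to the subnet the NIC is attached to. That check can be scripted with Python's standard `ipaddress` module, as in the sketch below. The address 192.0.0.5 comes from this use case; the subnet 192.0.0.0/24 is an assumption made for the example, and the check does not tell you whether the address is already in use.

```python
import ipaddress


def in_subnet(ip: str, subnet_cidr: str) -> bool:
    """Return True if the IP address falls inside the given subnet."""
    return ipaddress.ip_address(ip) in ipaddress.ip_network(subnet_cidr)


# 192.0.0.0/24 is a hypothetical subnet chosen for this example.
print(in_subnet("192.0.0.5", "192.0.0.0/24"))   # True
print(in_subnet("192.0.1.5", "192.0.0.0/24"))   # False
```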

Step 2: Configure Citrix ADC-owned IP Addresses

Configure the Citrix ADC-owned IP addresses by using the GUI or the command add ns ip. For more information, refer to: Configuring Citrix ADC-owned IP Addresses.
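When several IP configurations need to be added, the corresponding add ns ip commands can be generated from a list rather than typed by hand. The sketch below assumes the common form `add ns ip <address> <netmask> -type <type>`. The address 192.0.0.5 is the one used for ipconfig2 in this use case; the second address and the netmask are hypothetical. Review the generated commands against the Citrix ADC command reference before running them.

```python
# IP configurations to register on the appliance. The ipconfig3 address and
# the netmask are hypothetical values chosen for illustration.
ip_configs = [
    {"name": "ipconfig2", "private_ip": "192.0.0.5", "type": "VIP"},
    {"name": "ipconfig3", "private_ip": "192.0.0.6", "type": "SNIP"},
]
NETMASK = "255.255.255.0"


def add_ns_ip_command(private_ip: str, netmask: str, ip_type: str) -> str:
    """Build one 'add ns ip' command line for the given address and type."""
    return f"add ns ip {private_ip} {netmask} -type {ip_type}"


for cfg in ip_configs:
    print(add_ns_ip_command(cfg["private_ip"], NETMASK, cfg["type"]))
```

ipconfig1 is left out on purpose, because its private IP address is added automatically as the NSIP.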

For more information about how to deploy a Citrix ADC VPX instance on Microsoft Azure, see Deploy a Citrix ADC VPX Instance on Microsoft Azure.

For more information about how a Citrix ADC VPX instance works on Azure, see How a Citrix ADC VPX Instance Works on Azure.

ARM (Azure Resource Manager) Templates

The GitHub repository for Citrix ADC ARM (Azure Resource Manager) templates hosts Citrix ADC custom templates for deploying Citrix ADC in Microsoft Azure Cloud Services here: Citrix ADC Azure Templates. All templates in this repository were developed and are maintained by the Citrix ADC engineering team.

Each template in this repository has co-located documentation describing the usage and architecture of the template. The templates attempt to codify the recommended deployment architecture of the Citrix ADC VPX, to introduce the user to the Citrix ADC, or to demonstrate a particular feature, edition, or option. Users can reuse, modify, or enhance the templates to suit their particular production and testing needs. Most templates require sufficient subscriptions to portal.azure.com to create resources and deploy templates.

Citrix ADC VPX Azure Resource Manager (ARM) templates are designed to ensure an easy and consistent way of deploying standalone Citrix ADC VPX. These templates increase reliability and system availability with built-in redundancy. These ARM templates support Bring Your Own License (BYOL) or Hourly based selections. Choice of selection is either mentioned in the template description or offered during template deployment. For more information about how to provision a Citrix ADC VPX instance on Microsoft Azure using ARM (Azure Resource Manager) templates, visit: Citrix Azure ADC Templates.

Prerequisites

Users need some prerequisite knowledge before deploying a Citrix VPX instance on Azure:

-

Familiarity with Azure terminology and network details. For information, see the Azure terminology in the preceding section.

-

Knowledge of a Citrix ADC appliance. For detailed information about the Citrix ADC appliance, see Citrix ADC 13.0.

-

Knowledge of Citrix ADC networking. See the Networking topic here: Networking.

Limitations

Running the Citrix ADC VPX load balancing solution on ARM imposes the following limitations:

-

The Azure architecture does not accommodate support for the following Citrix ADC features:

-

Clustering

-

IPv6

-

Gratuitous ARP (GARP)

-

L2 Mode (bridging). Transparent virtual servers are supported with L2 (MAC rewrite) for servers in the same subnet as the SNIP.

-

Tagged VLAN

-

Dynamic Routing

-

Virtual MAC

-

USIP

-

Jumbo Frames

-

If you think you might have to shut down and temporarily deallocate the Citrix ADC VPX virtual machine at any time, assign a static Internal IP address while creating the virtual machine. If you do not assign a static internal IP address, Azure might assign the virtual machine a different IP address each time it restarts, and the virtual machine might become inaccessible.

-

In an Azure deployment, only the following Citrix ADC VPX models are supported: VPX 10, VPX 200, VPX 1000, and VPX 3000. For more information, see the Citrix ADC VPX Data Sheet.

-

If a Citrix ADC VPX instance with a model number higher than VPX 3000 is used, the network throughput might not be the same as specified by the instance’s license. However, other features, such as SSL throughput and SSL transactions per second, might improve.

-

The “deployment ID” that is generated by Azure during virtual machine provisioning is not visible to the user in ARM. Users cannot use the deployment ID to deploy a Citrix ADC VPX appliance on ARM.

-

The Citrix ADC VPX instance supports 20 Mb/s throughput and standard edition features when it is initialized.

-

For a XenApp and XenDesktop® deployment, a VPN virtual server on a VPX instance can be configured in the following modes:

-

Basic mode, where the ICAOnly VPN virtual server parameter is set to ON. The Basic mode works fully on an unlicensed Citrix ADC VPX instance.

-

Smart-Access mode, where the ICAOnly VPN virtual server parameter is set to OFF. The Smart-Access mode works for only 5 Citrix ADC AAA session users on an unlicensed Citrix ADC VPX instance.

Note:

To configure the Smart Control feature, users must apply a Premium license to the Citrix ADC VPX instance.

Azure-VPX Supported Models and Licensing

In an Azure deployment, only the following Citrix ADC VPX models are supported: VPX 10, VPX 200, VPX 1000, and VPX 3000. For more information, see the Citrix ADC VPX Data Sheet.

A Citrix ADC VPX instance on Azure requires a license. The following licensing options are available for Citrix ADC VPX instances running on Azure.

- Subscription-based licensing: Citrix ADC VPX appliances are available as paid instances on Azure Marketplace. Subscription-based licensing is a pay-as-you-go option in which users are charged hourly. The following VPX models and license types are available on Azure Marketplace:

| VPX Model | License Type |
|---|---|
| VPX10 | Standard, Advanced, Premium |
| VPX200 | Standard, Advanced, Premium |
| VPX1000 | Standard, Advanced, Premium |
| VPX3000 | Standard, Advanced, Premium |

-

Bring your own license (BYOL): See the VPX Licensing Guide at: CTX122426/NetScaler VPX and CloudBridge VPX Licensing Guide. Users have to:

-

Use the licensing portal within MyCitrix to generate a valid license.

-

Upload the license to the instance.

-

Citrix ADC VPX Check-In/Check-Out licensing: For more information, see: Citrix ADC VPX Check-in and Check-out Licensing.

Starting with NetScaler release 12.0 56.20, VPX Express for on-premises and cloud deployments does not require a license file. For more information on Citrix ADC VPX Express, see the “Citrix ADC VPX Express license” section in Licensing Overview.

Note: Regardless of the subscription-based hourly license bought from Azure Marketplace, in rare cases, the Citrix ADC VPX instance deployed on Azure might come up with a default NetScaler® license. This happens due to issues with Azure Instance Metadata Service (IMDS).

To enable the correct Citrix ADC VPX license, do a warm restart before making any configuration change on the Citrix ADC VPX instance.

Port Usage Guidelines

Users can configure additional inbound and outbound rules in the NSG while creating the NetScaler VPX™ instance or after the virtual machine is provisioned. Each inbound and outbound rule is associated with a public port and a private port.

Before you configure NSG rules, note the following guidelines regarding the port numbers you can use:

-

The NetScaler VPX instance reserves the following ports, which users cannot define as private ports when using the Public IP address for requests from the internet: 21, 22, 80, 443, 8080, 67, 161, 179, 500, 520, 3003, 3008, 3009, 3010, 3011, 4001, 5061, 9000, 7000. However, if users want internet-facing services such as the VIP to use a standard port (for example, port 443), they have to create a port mapping by using the NSG. The standard port is then mapped to a different port that is configured on the Citrix ADC VPX for this VIP service. For example, a VIP service might be running on port 8443 on the VPX instance but be mapped to public port 443. So, when the user accesses port 443 through the Public IP, the request is directed to private port 8443.

-

The Public IP address does not support protocols in which port mapping is opened dynamically, such as passive FTP or ALG.

-

High availability does not work for traffic that uses a public IP address (PIP) associated with a VPX instance, instead of a PIP configured on the Azure load balancer. For more information, see: Configure a High-Availability Setup with a Single IP Address and a Single NIC.

-

In a NetScaler Gateway deployment, users need not configure a SNIP address, because the NSIP can be used as a SNIP when no SNIP is configured. Users must configure the VIP address by using the NSIP address and some nonstandard port number. For call-back configuration on the back-end server, the VIP port number has to be specified along with the VIP URL (for example, url:port).
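The reserved-port guideline above can be checked before an NSG rule is written. The following is a minimal Python sketch, not Citrix or Azure tooling: the port list is copied from this guide, while the name `validate_port_mapping` and the choice to raise `ValueError` are assumptions made for illustration only.

```python
from typing import Tuple

# Ports this guide lists as reserved by the VPX instance.
RESERVED_PORTS = frozenset({
    21, 22, 80, 443, 8080, 67, 161, 179, 500, 520,
    3003, 3008, 3009, 3010, 3011, 4001, 5061, 9000, 7000,
})


def validate_port_mapping(public_port: int, private_port: int) -> Tuple[int, int]:
    """Return (public_port, private_port) if the mapping follows the guideline.

    Hypothetical helper: it only checks that both ports are valid and that
    the private port is not one of the reserved ports.
    """
    for label, port in (("public", public_port), ("private", private_port)):
        if not 1 <= port <= 65535:
            raise ValueError(f"{label} port {port} is outside 1-65535")
    if private_port in RESERVED_PORTS:
        raise ValueError(f"private port {private_port} is reserved by the VPX instance")
    return public_port, private_port


if __name__ == "__main__":
    # The example from the text: public port 443 mapped to private port 8443.
    print(validate_port_mapping(443, 8443))
```

The public port may still be a standard port such as 443; only the private port on the VPX instance is restricted in this sketch.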

Note:

In Azure Resource Manager, a Citrix ADC VPX instance is associated with two IP addresses: a public IP address (PIP) and an internal IP address. While external traffic connects to the PIP, the internal IP address, or NSIP, is non-routable. To configure a VIP in VPX, use the internal IP address (NSIP) and any of the free ports available. Do not use the PIP to configure a VIP.

For example, if the NSIP of a Citrix ADC VPX instance is 10.1.0.3 and an available free port is 10022, then users can configure a VIP by providing the 10.1.0.3:10022 (NSIP address + port) combination.
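The NSIP-plus-port rule in this note can be sketched as a small Python check. This is an illustration under stated assumptions rather than part of any Citrix tool: the helper name `vip_endpoint` is hypothetical, and treating "internal" as "`ipaddress` reports the address as private" is an approximation (that flag also covers loopback and link-local ranges). IPv6 is rejected because this guide lists it as unsupported on Azure.

```python
import ipaddress


def vip_endpoint(nsip: str, port: int) -> str:
    """Build the 'NSIP:port' string used to configure a VIP.

    Hypothetical helper: it rejects IPv6 and public addresses so that a PIP
    is not used for the VIP by mistake.
    """
    addr = ipaddress.ip_address(nsip)
    if addr.version != 4:
        raise ValueError("IPv6 is listed as unsupported on Azure in this guide")
    if not addr.is_private:
        # Assumption: a non-private address is a PIP, not an internal address.
        raise ValueError(f"{addr} looks like a public address; use the NSIP instead")
    if not 1 <= port <= 65535:
        raise ValueError(f"port {port} is outside 1-65535")
    return f"{addr}:{port}"


if __name__ == "__main__":
    # The example from the note: NSIP 10.1.0.3 with free port 10022.
    print(vip_endpoint("10.1.0.3", 10022))
```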
