Size and scale considerations for Cloud Connectors

When evaluating Citrix DaaS for sizing and scalability, consider all the components. Research and test the configuration of Citrix Cloud™ Connectors and StoreFront for your specific requirements. Providing insufficient resources for sizing and scalability negatively affects the performance of your deployment.

Note:

- These recommendations apply to Citrix DaaS Standard for Azure in addition to Citrix DaaS.

- The tests and recommendations given in this article are guidelines to help you start your testing. We recommend that you perform the testing in your environment to validate the correct connector sizing.

- For sizing and scale considerations for HDX proxy, see Cloud Connector sizing and scale considerations for HDX proxy.

This article provides details of the tested maximum capacities and best practice recommendations for Cloud Connector machine configuration. Tests were performed on deployments configured with StoreFront™ and Local Host Cache (LHC).

The information provided applies to deployments in which each resource location contains both VDI workloads and RDS workloads.

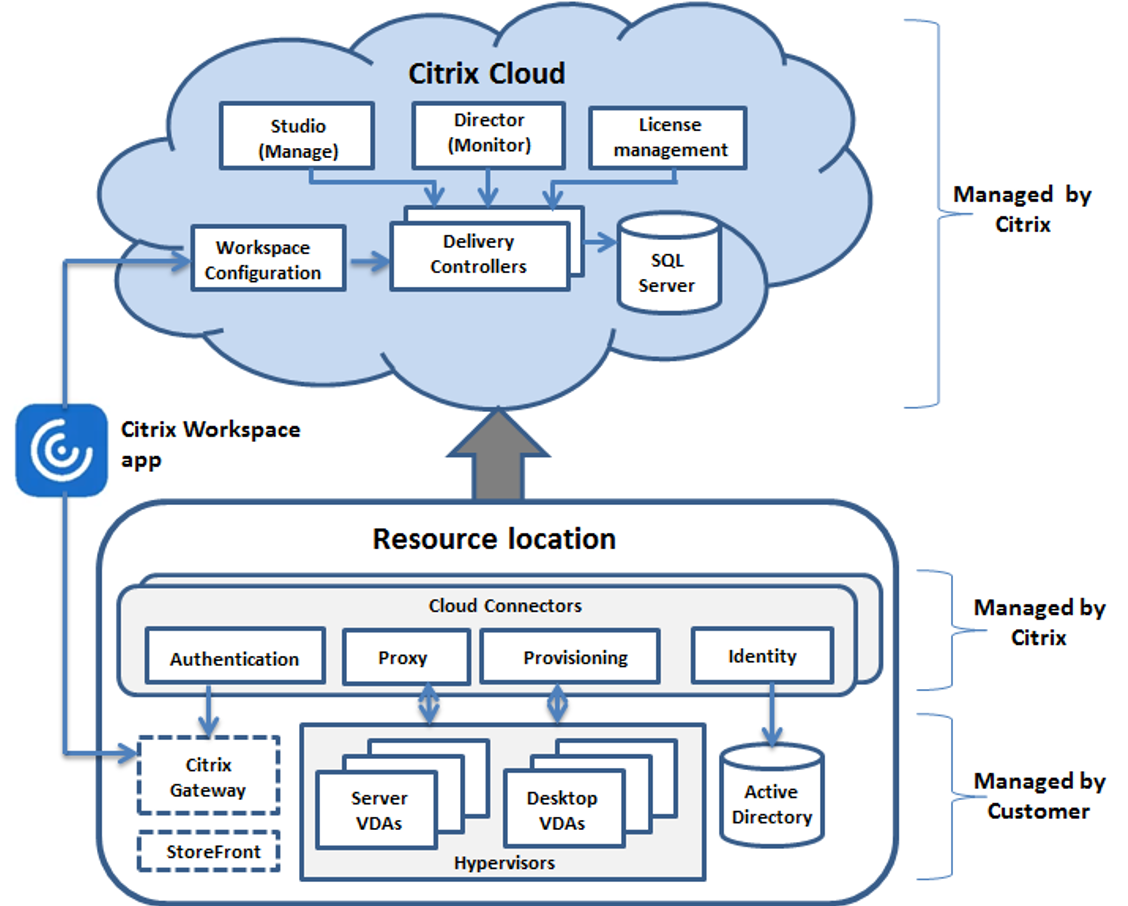

The Cloud Connector links your workloads to Citrix DaaS™ in the following ways:

- Provides a proxy for communication between your VDAs and Citrix DaaS.

- Provides a proxy for communication between Citrix DaaS and your Active Directory (AD) and hypervisors.

- In deployments that include StoreFront servers, the Cloud Connector serves as a temporary session broker during cloud outages, providing users with continued access to resources.

It is important to have your Cloud Connectors properly sized and configured to meet your specific needs. While tests were run with two Cloud Connectors, only one Cloud Connector is available during Cloud Connector upgrades. To ensure high availability during Cloud Connector upgrades, some customers have opted to deploy three Cloud Connectors.

Each set of Cloud Connectors is assigned to a resource location (also known as a zone in Studio). A resource location is a logical separation that specifies which resources communicate with that set of Cloud Connectors. At least one resource location is required per domain to communicate with the Active Directory (AD).

Each machine catalog and hosting connection is assigned to a resource location.

For deployments with more than one resource location, assign machine catalogs and VDAs to the resource locations to optimize the ability of LHC to broker connections during outages. For more information on creating and managing resource locations, see Connect to Citrix Cloud. For optimum performance, configure your Cloud Connectors on low-latency connections to VDAs, AD servers, and hypervisors.

Recommended processors and storage

For performance similar to that seen in these tests, use modern processors that support SHA extensions. SHA extensions reduce the cryptographic load on the CPU. Recommended processors include:

- Advanced Micro Devices (AMD) Zen and newer processors

- Intel Ice Lake and newer processors

The recommended processors run efficiently. You can use older processors, but doing so might lead to a higher CPU load. To offset this, we recommend increasing your vCPU count.

The tests described in this article were performed with AMD EPYC and Intel Cascade Lake processors.

Cloud Connectors carry a heavy cryptographic load while communicating with the cloud. Cloud Connectors that use processors with SHA extensions experience a lower CPU load, which shows up as lower CPU usage by the Windows Local Security Authority Subsystem Service (LSASS).

Citrix recommends using modern storage with adequate I/O operations per second (IOPS), especially for deployments that use LHC. Solid state drives (SSDs) are suggested but premium cloud storage tiers are not needed. Higher IOPS are needed for LHC scenarios where the Cloud Connector runs a small copy of the database. This database is updated with site configuration changes regularly and provides brokering capabilities to the resource location in times of Citrix Cloud outages.

Recommended compute configuration for Local Host Cache

Local Host Cache (LHC) provides high availability by enabling connection brokering operations in a deployment to continue when a Cloud Connector cannot communicate with Citrix Cloud.

Cloud Connectors run Microsoft SQL Server Express LocalDB, which is installed automatically when you install the Cloud Connector. The CPU configuration of the Cloud Connector, especially the number of cores available to SQL Server Express LocalDB, directly affects LHC performance, even more than memory allocation does. This CPU overhead is observed only in LHC mode, when Citrix DaaS is not reachable and the LHC broker is active. For any deployment using LHC, Citrix recommends four cores per socket, with a minimum of four CPU cores per Cloud Connector. For information on configuring compute resources for SQL Server Express LocalDB, see Compute capacity limits by edition of SQL Server.

If the compute resources available to SQL Server Express LocalDB are misconfigured, configuration synchronization might take longer and performance during outages might be reduced. In some virtualized environments, compute capacity might depend on the number of logical processors and not CPU cores.
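The effect of socket layout on LHC can be sketched as follows. This is a minimal illustration, assuming the limit that Microsoft publishes for SQL Server Express (the lesser of one socket or four cores) also applies to LocalDB. The function name and the VM layouts are hypothetical, so verify the behavior on your own hypervisor.

```python
# Assumption: SQL Server Express (and therefore LocalDB) uses at most
# one socket and at most four cores, per Microsoft's published limits.
EXPRESS_MAX_SOCKETS = 1
EXPRESS_MAX_CORES = 4


def localdb_usable_cores(sockets: int, cores_per_socket: int) -> int:
    """Estimate how many cores LocalDB can use for a given VM layout."""
    if sockets < 1 or cores_per_socket < 1:
        raise ValueError("sockets and cores_per_socket must be positive")
    usable_sockets = min(sockets, EXPRESS_MAX_SOCKETS)
    return min(usable_sockets * cores_per_socket, EXPRESS_MAX_CORES)


# Two hypothetical ways to present 4 vCPUs to a Cloud Connector VM.
print(localdb_usable_cores(sockets=4, cores_per_socket=1))  # 1
print(localdb_usable_cores(sockets=1, cores_per_socket=4))  # 4
```

Under these assumptions, a VM presented as four single-core sockets leaves LocalDB with one core, which is why the recommendation is expressed as cores per socket.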

Summary of test findings

All results in this summary are based on the findings from a test environment as configured in the detailed sections of this article. The results shown here are for a single resource location. Different system configurations might yield different results.

This illustration gives a graphical overview of the tested configuration.

The following table shows the minimum recommended Cloud Connector CPU and memory configurations for sites of various sizes. Results of testing with these configurations are shown below. See Limits for more information on the resource location limits.

| | Medium | Large | Maximum |

|---|---|---|---|

| Connectors for HA | 2 | 2 | 3 |

| VDAs | Up to 1000 | 1001 - 5000 | 5001 - 10,000 |

| Sessions | Up to 2500 | Up to 10,000 | Up to 25,000 |

| Hosting connections | Up to 20 | Up to 40 | Up to 40 |

| CPUs for Cloud Connectors | 4 vCPUs | 4 vCPUs | 8 vCPUs |

| Memory for Cloud Connectors | 6 GB | 8 GB | 10 GB |

Note:

If your deployment exceeds 5000 VDAs, you must use three Cloud Connectors for high availability and scalability.
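As a starting point for your own testing, the table above can be expressed as a simple lookup. The sketch below is illustrative only: the class and function names are hypothetical, hosting connection limits are left out for brevity, and the result is a minimum to validate in your environment, not a guarantee.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectorSizing:
    tier: str
    connectors: int  # Cloud Connectors for high availability
    vcpus: int       # vCPUs per Cloud Connector
    memory_gb: int   # memory per Cloud Connector


# Values copied from the table above; tier names match its columns.
_TIERS = [
    # (max VDAs, max sessions, sizing)
    (1_000, 2_500, ConnectorSizing("Medium", 2, 4, 6)),
    (5_000, 10_000, ConnectorSizing("Large", 2, 4, 8)),
    (10_000, 25_000, ConnectorSizing("Maximum", 3, 8, 10)),
]


def recommend_sizing(vdas: int, sessions: int) -> ConnectorSizing:
    """Return the smallest tier whose VDA and session limits both fit."""
    for max_vdas, max_sessions, sizing in _TIERS:
        if vdas <= max_vdas and sessions <= max_sessions:
            return sizing
    raise ValueError("Workload exceeds one resource location; add another")


print(recommend_sizing(vdas=800, sessions=9_000))
```

In this example the VDA count fits the Medium column, but the session count pushes the recommendation to Large, because both limits must be satisfied.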

Test methodology

Tests were conducted to add load and to measure the performance of the environment components. The components were monitored by collecting performance data and procedure timing, such as logon time and registration time. In some tests, proprietary Citrix simulation tools were used to simulate VDAs and sessions. These tools are designed to exercise Citrix components the same way that traditional VDAs and sessions do, without the resource requirements of hosting real sessions and VDAs. Tests were conducted in both cloud brokering and LHC mode for scenarios with Citrix StoreFront.

Recommendations for Cloud Connector sizing in this article are based on data gathered from these tests.

The following tests were run:

- Session logon/launch storm: a test that simulates high-volume logon periods.

- VDA registration storm: a test that simulates high-volume VDA registration periods, for example, following an upgrade cycle or a transition between cloud brokering and Local Host Cache mode.

- VDA power action storm: a test that simulates a high volume of VDA power actions.

Test scenarios and conditions

These tests were performed with LHC configured. For more information about using LHC, see the Local Host Cache article. LHC requires an on-premises StoreFront server. For detailed information about StoreFront, see the StoreFront product documentation.

Recommendations for StoreFront configurations:

- If you have multiple resource locations with a single StoreFront server or server group, enable the advanced health check option for the StoreFront store. See StoreFront requirement in the Local Host Cache article.

- For higher session launch rates, use a StoreFront server group. See Configure server groups in the StoreFront product documentation.

Test conditions:

- CPU and memory requirements are for the base OS and Citrix services only. Third-party apps and services might require additional resources.

- VDAs are any virtual or physical machines running Citrix Virtual Delivery Agent.

- Tests were performed using Windows VDAs only.

- All VDAs tested were power-managed using Citrix DaaS.

- Sessions were launched at a sustained rate of 1,000 per minute.

- Workloads of 1,000–10,000 VDI and 500–10,000 RDS servers with 1,000–25,000 sessions were tested.

- RDS sessions were tested up to 25,000 per resource location.

- Tests were performed using two Cloud Connectors, both in normal operations and during outages. Citrix recommends using at least two Cloud Connectors for high availability, and three Cloud Connectors for large and maximum-sized resource locations. In outage mode, only one of the Cloud Connectors is used for VDA registrations and brokering. While tests were run with two Cloud Connectors, only one Cloud Connector is available during upgrades. To ensure high availability during upgrades, some customers have opted to run with three Cloud Connectors.

- Tests were performed with the Cloud Connector configured with Intel Cascade Lake processors.

- Sessions were launched via a single Citrix StoreFront server.

- LHC outage session launch tests were conducted after machines had re-registered.

RDS session counts are a recommendation and not a limit. Test your own RDS session limit in your environment.

Note:

Session count and launch rate are more important for RDS than the VDA count.
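To see how session count and launch rate interact, the length of a logon storm can be estimated by dividing one by the other. This is a rough sketch that assumes a constant launch rate with no ramp-up or retries; the function name is hypothetical, and measured times in your environment might differ.

```python
def launch_storm_minutes(sessions: int, launches_per_minute: float) -> float:
    """Estimate minutes needed to launch all sessions at a sustained rate."""
    if launches_per_minute <= 0:
        raise ValueError("launches_per_minute must be positive")
    return sessions / launches_per_minute


# Workload sizes and launch rates taken from the tests in this article.
print(f"{launch_storm_minutes(5_000, 555):.1f}")     # 9.0
print(f"{launch_storm_minutes(20_000, 1_200):.1f}")  # 16.7
```

Because RDS hosts carry many sessions each, a small number of VDAs can still produce a long launch period, which is why the session count matters more than the VDA count here.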

Medium workloads

These workloads were tested with 4 vCPUs and 6 GB memory.

| Test workloads | Site condition | VDA registration time | Registration CPU and memory usage | Launch test length | Session launch CPU and memory usage | Launch rate |

|---|---|---|---|---|---|---|

| 1000 VDI | Online | 5 minutes | CPU maximum = 36%, CPU average = 33%, memory maximum = 5.3 GB | 2 minutes | CPU maximum = 29%, CPU average = 27%, memory maximum = 3.7 GB | 500 per minute |

| 1000 VDI | Outage | 4 minutes | CPU maximum = 11%, CPU average = 10%, memory maximum = 4.5 GB | 2 minutes | CPU maximum = 42%, CPU average = 28%, memory maximum = 4.0 GB | 500 per minute |

| 250 RDS, 5000 sessions | Online | 3 minutes | CPU maximum = 14%, CPU average = 4%, memory maximum = 3.5 GB | 9 minutes | CPU maximum = 46%, CPU average = 21%, memory maximum = 3.7 GB | 555 per minute |

| 250 RDS, 5000 sessions | Outage | 3 minutes | CPU maximum = 15%, CPU average = 5%, memory maximum = 3.7 GB | 9 minutes | CPU maximum = 51%, CPU average = 32%, memory maximum = 4.2 GB | 555 per minute |

Large workloads

These workloads were tested with 4 vCPUs and 8 GB memory.

| Test workloads | Site condition | VDA registration time | Registration CPU and memory usage | Launch test length | Session launch CPU and memory usage | Launch rate |

|---|---|---|---|---|---|---|

| 5000 VDI | Online | 3–4 minutes | CPU maximum = 45%, CPU average = 25%, memory maximum = 7.0 GB | 5 minutes | CPU maximum = 75%, CPU average = 55%, memory maximum = 7.0 GB | 1000 per minute |

| 5000 VDI | Outage | 4–6 minutes | CPU maximum = 15%, CPU average = 5%, memory maximum = 7.5 GB | 5 minutes | CPU maximum = 45%, CPU average = 40%, memory maximum = 7.5 GB | 1000 per minute |

| 500 RDS, 10,000 sessions | Online | 3 minutes | CPU maximum = 45%, CPU average = 25%, memory maximum = 7.0 GB | 10 minutes | CPU maximum = 75%, CPU average = 55%, memory maximum = 7.0 GB | 1000 per minute |

| 500 RDS, 10,000 sessions | Outage | 3 minutes | CPU maximum = 15%, CPU average = 5%, memory maximum = 7.5 GB | 10 minutes | CPU maximum = 45%, CPU average = 40%, memory maximum = 7.5 GB | 1000 per minute |

Maximum workloads

These workloads were tested with 8 vCPUs and 10 GB memory.

| Test workloads | Site condition | VDA registration time | Registration CPU and memory usage | Launch test length | Session launch CPU and memory usage | Launch rate |

|---|---|---|---|---|---|---|

| 10,000 VDI | Online | 3–4 minutes | CPU maximum = 85%, CPU average = 10%, memory maximum = 8.5 GB | 7 minutes | CPU maximum = 66%, CPU average = 28%, memory maximum = 7.0 GB | 1400 per minute |

| 10,000 VDI | Outage | 4–5 minutes | CPU maximum = 90%, CPU average = 17%, memory maximum = 8.2 GB | 5 minutes | CPU maximum = 90%, CPU average = 45%, memory maximum = 8.5 GB | 2000 per minute |

| 1000 RDS, 20,000 sessions | Online | 1–2 minutes | CPU maximum = 60%, CPU average = 20%, memory maximum = 8.6 GB | 17 minutes | CPU maximum = 66%, CPU average = 25%, memory maximum = 6.8 GB | 1200 per minute |

| 1000 RDS, 20,000 sessions | Outage | 3–4 minutes | CPU maximum = 22%, CPU average = 10%, memory maximum = 8.5 GB | 21 minutes | CPU maximum = 90%, CPU average = 50%, memory maximum = 7.5 GB | 1000 per minute |

Note:

The workloads shown here are the maximum recommended workloads for one resource location. To support larger workloads, add more resource locations.
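The number of resource locations needed for a larger deployment can be estimated from the per-location maximums in this article. The sketch below is a rough planning aid only: it assumes workloads can be spread evenly across locations, the function name is hypothetical, and other limits (see Limits) might apply first.

```python
import math

# Maximum recommended workload for one resource location, from this article.
MAX_VDAS_PER_LOCATION = 10_000
MAX_SESSIONS_PER_LOCATION = 25_000


def resource_locations_needed(vdas: int, sessions: int) -> int:
    """Estimate how many resource locations a workload requires."""
    if vdas < 0 or sessions < 0:
        raise ValueError("vdas and sessions must not be negative")
    by_vdas = math.ceil(vdas / MAX_VDAS_PER_LOCATION)
    by_sessions = math.ceil(sessions / MAX_SESSIONS_PER_LOCATION)
    # Whichever limit is reached first determines the count.
    return max(1, by_vdas, by_sessions)


# Hypothetical deployment: 24,000 VDAs hosting 40,000 sessions.
print(resource_locations_needed(vdas=24_000, sessions=40_000))  # 3
```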

Configuration synchronization resource usage

The configuration synchronization process keeps the Cloud Connectors up to date with Citrix DaaS. Updates are automatically sent to the Cloud Connectors to make sure that they are ready to take over brokering if an outage occurs. The configuration synchronization updates the LHC database, which runs on SQL Server Express LocalDB. The process imports the data to a temporary database, then switches to that database once the import completes. This ensures that there is always an LHC database ready to take over.

The CPU, memory, and disk usage are temporarily increased while data is imported to the temporary database.

Test conditions:

- Tested on an 8 vCPU AMD EPYC

- The imported site configuration database was for an environment with site-wide total of 80,000 VDAs and 300,000 users (three shifts of 100,000 users)

- Data import time was tested on a resource location with 10,000 VDI

Test results:

- Data import time: 7–10 minutes

- CPU usage:

  - maximum = 25%

  - average = 15%

- Memory usage:

  - increase of approximately 2 GB to 3 GB

- Disk usage:

  - 4 MB/s disk read spike

  - 18 MB/s disk write spike

  - 70 MB/s disk write spike during downloading and writing of XML config files

  - 4 MB/s disk read spike at the completion of import

- LHC database size:

  - 400–500 MB database file

  - 200–300 MB log database

Additional resource usage considerations:

- During import, the full site configuration data is downloaded. This download might cause a memory spike, depending on the site size. If memory spikes occur during configuration synchronization, consider increasing the memory of the Cloud Connectors.

- The tested site used approximately 800 MB for the database and database log files combined. During a configuration synchronization, these files are duplicated with a maximum combined size of approximately 1600 MB. Ensure that your Cloud Connector has enough disk space for the duplicated files. The configuration synchronization process fails if the disk is full.
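A simple pre-check for disk headroom might look like the following sketch. It assumes the figures from the tested site above (about 800 MB of LHC files, duplicated during a synchronization); the function name is hypothetical, and the path passed to `shutil.disk_usage` is a placeholder for whichever volume holds the LHC database in your deployment.

```python
import shutil

# Assumption: figures from the tested site in this article; measure your own.
LHC_FILES_MB = 800           # database plus log files
SYNC_DUPLICATION_FACTOR = 2  # files are duplicated during a sync


def has_sync_headroom(free_bytes: int, lhc_files_mb: int = LHC_FILES_MB) -> bool:
    """Return True if free space covers the extra copy created during a sync."""
    extra_mb = lhc_files_mb * (SYNC_DUPLICATION_FACTOR - 1)
    return free_bytes >= extra_mb * 1024 * 1024


if __name__ == "__main__":
    # "." is a placeholder path; point it at the volume storing the LHC files.
    free = shutil.disk_usage(".").free
    print(has_sync_headroom(free))
```

The check compares free space against the additional copy only, because the existing database files already occupy their share of the disk.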